In a FAANG System Design interview, interviewers want structure, clarity, and confidence. What most candidates give them is a half-baked architecture with too many question marks.

If that sounds familiar, you’re not alone. The FAANG companies (Facebook, now Meta; Amazon; Apple; Netflix; and Google) don’t just test whether you can diagram a system. They want to see how you think. How you handle ambiguity. Whether you can design systems that scale to millions (or billions) of users while making smart trade-offs under pressure.

That’s exactly what this guide is for. Whether you’re designing a file storage system, a real-time chat app, or a news feed, the structure I’ll walk you through is what helped me, and many engineers I’ve mentored, go from overwhelmed to well-prepared. We’ll take a structured approach that mirrors what top engineers use every day in the real world.

What Are FAANG System Design Interviews Really Testing?

Let’s get this straight: FAANG System Design interviews aren’t about throwing buzzwords at a whiteboard. They’re designed to reveal how you solve problems at scale, how you handle ambiguity, and how well you weigh trade-offs when there is no single “right” answer.

Here’s what your interviewer is really looking for:

1. Clarity of thought

They want to know: Can you break down a vague prompt into a concrete problem? Can you ask the right clarifying questions without waiting for a prompt? In a FAANG System Design interview, interviewers rarely hand you detailed specs. Your ability to uncover them shows product sense and engineering maturity.

2. Scalability mindset

You’re not designing for 10 users, but for 10 million, and maybe scaling to 100 million later. That means your choices around databases, caching, queues, and data consistency matter. The interviewer wants to see you consider growth and traffic patterns.

3. Trade-off analysis

You’ll rarely have time to build the perfect system. Do you prioritize availability over consistency? Optimize for write performance or read latency? FAANG engineers make decisions based on context, and you’re expected to do the same.

4. Communication and collaboration

You’ll need to talk through ideas clearly, organize your thoughts visually, and adapt when your interviewer probes. In a FAANG System Design interview, it’s not just what you say; it’s how you say it.

5. Depth in areas that matter

A good interview balances breadth (understanding all components) and depth (digging into a few). You’re expected to go deep where it counts, like caching strategies, queue processing, or database partitioning.

So when people ask, “How do I crack the FAANG System Design interview?” my answer is simple: don’t memorize systems. Build a mindset. Practice structure. Develop judgment. That’s what FAANG companies are really testing.

8 Steps to Crack the FAANG System Design Interview

Step 1: Clarify the Problem

This is where many candidates trip up. They hear “Design Dropbox” and immediately start drawing boxes. Don’t. In a FAANG System Design interview, the most impressive thing you can do in the first 5 minutes is pause, think, and ask smart questions.

Let’s take an example: “Design a video streaming platform.”

Instead of diving into architecture, start by clarifying two key areas:

Functional Requirements

Ask things like:

- Should users just watch videos, or upload them too?

- Do we support live streaming or only on-demand?

- Are we building for web, mobile, or both?

- Is user authentication in scope?

You’re showing the interviewer that you think like a product owner as much as an engineer. That’s gold in a FAANG System Design interview.

Non-Functional Requirements

This is your chance to uncover the scale, performance, and reliability expectations:

| Requirement | Sample Questions to Ask |
|---|---|
| Scale | “How many daily active users are we targeting?” |
| Performance | “Is sub-second latency critical for search or playback?” |
| Availability | “What level of uptime are we aiming for?” |
| Consistency | “Is it okay if newly uploaded videos take time to appear?” |
| Security | “Do we need content-level permissions or geo-blocking?” |

When I coach engineers, I always say: your first 5 minutes should sound like a discovery call, not a TED Talk. In a FAANG System Design interview, asking sharp clarifying questions positions you as someone who builds systems with real-world context, not someone who just draws pretty diagrams.

Once you’ve clarified the problem space, only then should you say: “Great. Here’s how I’d approach the design.”

Step 2: Estimate Scale & Load

Once you’ve clarified the problem, it’s time to get quantitative. In a FAANG System Design interview, this is where you show you can think like an engineer working at scale, not someone building for a classroom project. Quick, thoughtful estimation tells your interviewer you’re grounded in reality and understand the implications of design choices.

Let’s say the prompt is: “Design a Twitter-like social feed.” Start with basic assumptions and walk through the math out loud:

- Monthly Active Users (MAUs): Let’s assume 100 million.

- Daily Active Users (DAUs): Roughly 10% of MAUs → 10 million.

- Queries per Second (QPS): Suppose each user checks their feed 5 times/day on average.

  - 10M users × 5 = 50M requests/day ÷ 86,400 seconds ≈ 580 QPS (averaged)

  - Add headroom for peak traffic and growth: Let’s plan for 10K QPS.

Now break down read/write ratios:

- Feed reads: 90%

- New post writes: 10%

This means that during peak load, your backend will receive 9K reads/sec and 1K writes/sec.

Then touch on storage requirements:

- If each post is ~1KB and each daily active user posts 2x/day → 20M posts/day ≈ 20GB/day

- For 1 year: 20GB × 365 ≈ 7.3TB of post data (excluding media files)

Don’t forget media storage: Video thumbnails and images will go to object storage (e.g., S3), not the database.

In the FAANG System Design interview, estimations like these help justify why you’re choosing Redis over Memcached, or why you need to shard your database early. It’s about showing your reasoning. You’re signaling that you’re designing systems for scale, not just correctness.
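The arithmetic above is easy to sanity-check in a few lines. This is a minimal sketch, assuming 1KB means 1,000 bytes and using a hypothetical helper named `estimate_feed_load`; it reports averages only, so the 10K QPS peak figure remains a separate planning assumption.

```python
SECONDS_PER_DAY = 86_400


def estimate_feed_load(dau, reads_per_user_per_day, posts_per_user_per_day, post_size_bytes):
    """Back-of-envelope averages for a feed service (illustrative helper, not a standard API)."""
    daily_bytes = dau * posts_per_user_per_day * post_size_bytes
    return {
        "avg_read_qps": dau * reads_per_user_per_day / SECONDS_PER_DAY,
        "avg_write_qps": dau * posts_per_user_per_day / SECONDS_PER_DAY,
        "daily_storage_gb": daily_bytes / 1e9,
        "yearly_storage_tb": daily_bytes * 365 / 1e12,
    }


# Inputs taken from the walkthrough: 10M DAU, 5 feed reads and 2 posts per user per day, ~1KB per post.
est = estimate_feed_load(
    dau=10_000_000,
    reads_per_user_per_day=5,
    posts_per_user_per_day=2,
    post_size_bytes=1_000,
)
for name, value in est.items():
    print(f"{name}: {value:,.1f}")
```

Running the numbers this way also exposes a gap worth saying out loud in the interview: the average write rate implied by 2 posts per user per day is far below the 1K writes/sec peak budget, which is exactly the kind of headroom you should be able to justify.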

Step 3: High-Level Architecture

Now that you’ve scoped the problem and estimated the scale, it’s time to translate that thinking into a high-level design. In a FAANG System Design interview, this is where you show you can piece together large-scale, maintainable systems that don’t fall apart under pressure.

Let’s walk through a high-level architecture for a simplified social feed system (Instagram-style). Imagine you’re standing at a whiteboard or using a collaborative tool like CoderPad or Excalidraw.

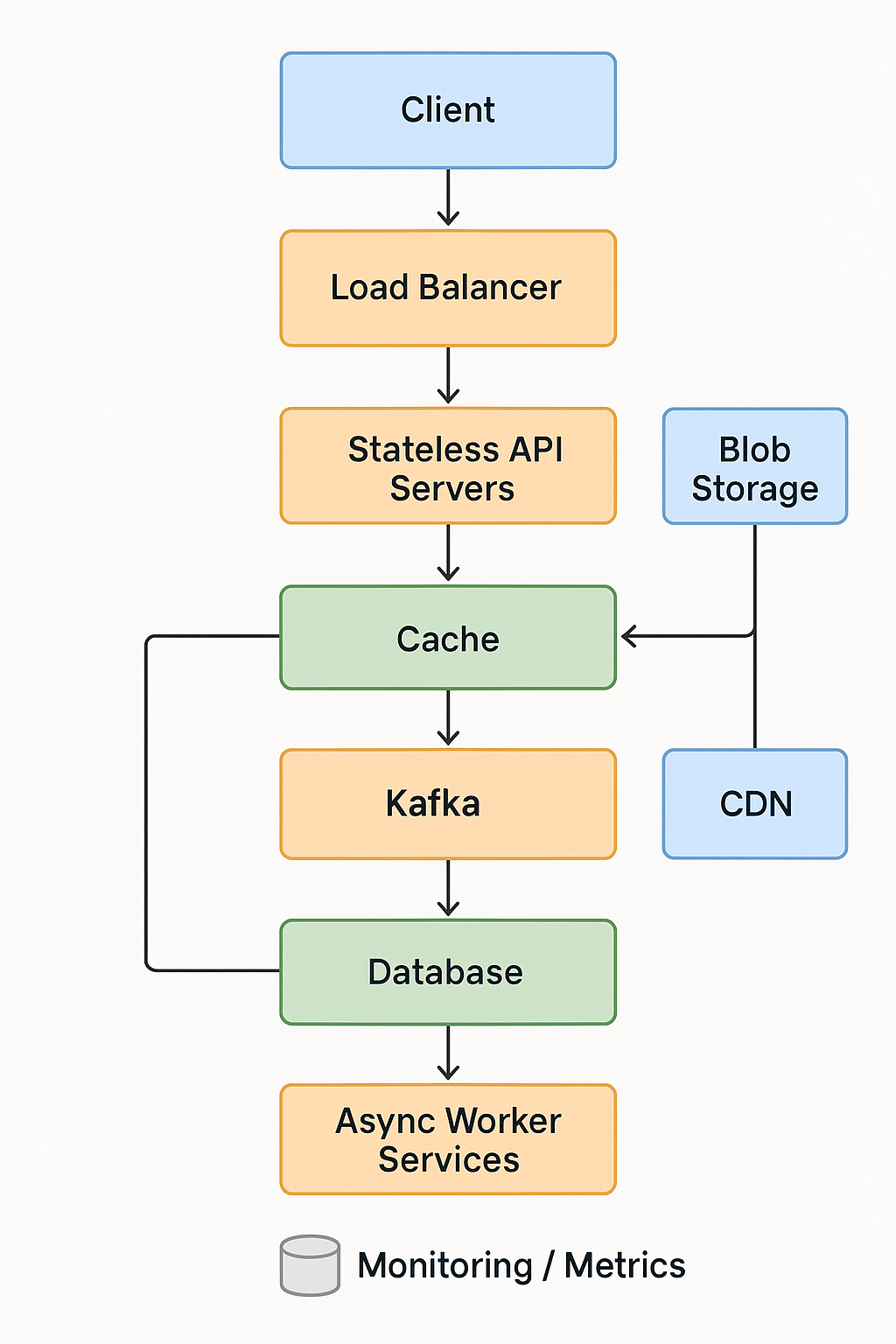

Components:

- Load Balancer: Distributes incoming traffic across multiple application servers to handle scale and ensure availability.

- Stateless API/App Layer: Handles business logic, authenticates users, fetches feeds, and interacts with the database and cache.

- Cache Layer: Use Redis or Memcached to store frequently accessed data like feed content, user sessions, or trending posts.

- Database Layer: A horizontally sharded relational DB or NoSQL system to store user and post data.

- Async Workers & Message Queues: Kafka or SQS for decoupling heavy operations like feed generation, notifications, or analytics.

- Blob Storage: Use S3/GCS for media files (images, videos), decoupled from database storage.

- Optional Layers:

  - Search Service: Elasticsearch for user or content search.

  - CDN: For delivering static content quickly worldwide.

  - Analytics Pipelines: For engagement tracking and personalized ranking.

“Here’s how I’d stitch the flow together for performance and maintainability…”

Walk your interviewer through a request lifecycle:

“When a user opens the app, a request hits the load balancer, routes to the API server, checks Redis for a cached feed, and falls back to the DB if needed. If the feed is stale, we asynchronously rebuild it using a Kafka topic.”

This narration helps interviewers follow your logic, and in the FAANG System Design interview, demonstrating this storytelling skill is just as important as getting the boxes right.
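The read path in that narration is the cache-aside pattern. Here is a minimal in-memory sketch of it, assuming a plain dict stands in for both Redis and the database; `FeedService`, `db_reads`, and the 5-minute TTL are illustrative names and values, not part of any real client library.

```python
import time


class FeedService:
    """Cache-aside read path: try the cache, fall back to the DB, then populate the cache."""

    def __init__(self, db, ttl_seconds=300, clock=time.monotonic):
        self._db = db              # hypothetical store: user_id -> list of post ids
        self._cache = {}           # user_id -> (expires_at, feed); stands in for Redis
        self._ttl = ttl_seconds
        self._clock = clock
        self.db_reads = 0          # counter so the fallback behavior is observable

    def get_feed(self, user_id):
        entry = self._cache.get(user_id)
        if entry is not None and entry[0] > self._clock():
            return entry[1]        # cache hit: no database work
        # Cache miss or expired entry: read from the DB and repopulate.
        self.db_reads += 1
        feed = list(self._db.get(user_id, []))
        self._cache[user_id] = (self._clock() + self._ttl, feed)
        return feed

    def invalidate(self, user_id):
        """Drop a cached feed, e.g. after a new post changes it."""
        self._cache.pop(user_id, None)


service = FeedService(db={"u1": ["p3", "p2", "p1"]})
print(service.get_feed("u1"), service.db_reads)  # first call reads the DB
print(service.get_feed("u1"), service.db_reads)  # second call is served from cache
```

The asynchronous rebuild via a queue is left out here; in this sketch a stale entry is simply refetched inline.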

Step 4: API Design and Data Modeling

Once your architecture is in place, you’ll want to define how services interact. In a FAANG System Design interview, good API design and thoughtful data modeling show that you’re not just thinking about infrastructure, but you’re designing for developer usability, scalability, and long-term evolution.

Example API Endpoints:

GET /feed?user_id=xyz&limit=20

POST /posts

GET /users/:id

- Include versioning: GET /v1/feed

- Use query params for filtering and pagination

- REST is widely used, but if the interviewer hints at internal RPC systems, mention gRPC and protocol buffers (especially for high-performance service-to-service calls).

Schema Design

Tables:

- users(user_id PK, name, profile_url, created_at)

- posts(post_id PK, user_id FK, content, media_url, timestamp)

- feeds(user_id, post_id, rank_score, timestamp), with composite PK (user_id, post_id) so each user can hold many feed entries

Key Design Considerations:

- Sharding Strategy:

  - Hash on user_id for evenly distributing traffic

  - Range partition by timestamp if time-series access dominates

- Indexes:

  - Composite index on (user_id, timestamp) for fast feed fetches

- Denormalization:

  - Store post metadata in the feed table for faster read access

In the FAANG System Design interview, you want to clearly communicate trade-offs:

“Denormalizing data improves read latency at the cost of storage and update complexity. Given our 90% read-heavy pattern, this is a worthwhile trade-off.”

Demonstrating this type of reasoning highlights that you’re optimizing not just for data correctness but for system goals like latency and availability, which are key values for any FAANG team.
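To make the "hash on user_id" sharding idea concrete, here is a small sketch of shard routing. It assumes string user IDs and a fixed shard count; `shard_for` is a hypothetical helper. A stable hash from `hashlib` is used because Python's built-in `hash()` for strings varies between processes.

```python
import hashlib
from collections import Counter


def shard_for(user_id: str, num_shards: int) -> int:
    """Map a user ID to a shard index using a stable hash."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Take the first 8 bytes as an unsigned integer, then reduce modulo the shard count.
    return int.from_bytes(digest[:8], "big") % num_shards


# Rough check that users spread evenly across 8 shards.
counts = Counter(shard_for(f"user-{i}", 8) for i in range(10_000))
for shard in sorted(counts):
    print(shard, counts[shard])
```

One trade-off to mention: with plain modulo routing, changing the shard count remaps most keys, which is why resharding plans often reach for consistent hashing or a lookup table instead.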

Step 5: Deep Dive Into a Subsystem

This is your opportunity to shine. After laying out your system’s architecture, the interviewer will usually ask: “Let’s dive deeper into one part. What would you like to explore?” In the FAANG System Design interview, this is where you differentiate yourself from cookie-cutter candidates.

Let’s explore a few strong options depending on the problem:

A. Caching Strategy

What to cache: User sessions, profile data, feed results, or hot content.

Tech: Redis for feed caching (LRU/TTL), CDN (e.g., Cloudflare) for image/video delivery.

Invalidation strategy:

- Evict feed cache after post upload

- Use TTL (e.g., 5 min) to keep content relatively fresh

Write-through vs Write-behind:

- Write-through is safer but adds latency

- Write-behind can reduce load, but risks losing updates on failure

In the FAANG System Design interview, I always recommend clarifying cache freshness requirements. Stale reads are OK in feeds, but not in account balances.

B. Scaling the Database

Vertical Scaling:

- Add more CPU/RAM

- Limited ceiling, single point of failure

Horizontal Scaling:

- Shard by user_id across multiple DBs

- Ensure strong consistency within shards

Replication:

- Leader–follower setup for high availability

- Async replication for read scaling, with potential consistency lag

CAP & PACELC:

- In feed services, prefer availability over consistency during partitions (AP systems), and low latency over strict consistency the rest of the time

- For correctness-critical operations (e.g., payments), lean toward consistency

Show you understand why your architecture leans one way, not just how it works.

C. Asynchronous Processing & Queues

Use case: decouple writes and side effects like notifications, ranking updates, analytics

Queue systems: Kafka (high-throughput), SQS (fully managed)

Push vs Pull:

- Push for immediate fan-out

- Pull when consumers need control (e.g., video transcoding pipeline)

Retries & Dead Letter Queues (DLQs):

- Retain failed events for debugging

- Set retry thresholds to avoid infinite loops

In my own FAANG System Design interview, I walked through how we’d recover from failed fan-out to notification services using exponential backoff and DLQs, and it made a strong impression.
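A retry loop with exponential backoff and a dead letter queue can be sketched in a few lines. This is an illustration only: `process_with_retries` is a hypothetical helper, the DLQ is a plain list, and the base delay, cap, and attempt count are assumed values rather than recommendations.

```python
import random
import time


def process_with_retries(event, handler, dead_letters, max_attempts=4,
                         base=0.5, cap=30.0, sleep=time.sleep):
    """Call handler(event); on failure, back off exponentially, then dead-letter the event."""
    last_error = None
    for attempt in range(max_attempts):
        try:
            return handler(event)
        except Exception as exc:  # a real consumer would catch narrower error types
            last_error = exc
            if attempt < max_attempts - 1:
                # "Full jitter": wait a random time up to the exponential bound.
                sleep(random.uniform(0, min(cap, base * 2 ** attempt)))
    # Retry threshold reached: keep the event and the error for later debugging.
    dead_letters.append({"event": event, "error": repr(last_error)})
    return None


def always_down(event):
    raise ConnectionError("notification service unavailable")


dlq = []
process_with_retries({"type": "notify", "user": "u1"}, always_down, dlq,
                     sleep=lambda seconds: None)  # skip real sleeping in the demo
print(dlq)
```

The bounded attempt count is what prevents the infinite retry loop, and the jitter keeps many failing consumers from retrying in lockstep.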

D. Observability

Metrics to Monitor:

- QPS, latency, cache hit ratio, DB query time

- Feed staleness %, post replication lag

Alerting:

- Use Prometheus for metrics and alert rules, with Grafana for dashboards

- Define SLOs (e.g., 95% of feed requests < 200ms)

Resilience Techniques:

- Circuit breakers on downstream failures

- Canary releases for safe deployments

- Rollbacks triggered by error budgets

In the FAANG ecosystem, observability isn’t optional. It’s part of the design from day one.
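An SLO like "95% of feed requests under 200ms" is just a ratio over observed latencies. A minimal sketch, assuming latencies are already collected as a list of milliseconds; `fraction_within` and `slo_met` are hypothetical helper names, and a real setup would compute this from histogram metrics instead of raw samples.

```python
def fraction_within(latencies_ms, threshold_ms):
    """Share of requests that finished faster than the threshold."""
    if not latencies_ms:
        return 1.0  # no traffic means nothing violated the objective
    fast = sum(1 for value in latencies_ms if value < threshold_ms)
    return fast / len(latencies_ms)


def slo_met(latencies_ms, threshold_ms=200, target=0.95):
    """True when at least `target` of requests beat the latency threshold."""
    return fraction_within(latencies_ms, threshold_ms) >= target


healthy = [100] * 96 + [500] * 4     # 96% fast
degraded = [100] * 90 + [500] * 10   # 90% fast
print(slo_met(healthy), slo_met(degraded))
```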

Step 6: Trade-offs and Design Alternatives

Every strong System Design conversation involves making trade-offs. That’s especially true in a FAANG System Design interview, where you’re expected to balance performance, scalability, complexity, and cost, often with conflicting priorities.

Here’s how I approach this section once the core design is on the board:

SQL vs NoSQL

SQL (e.g., Postgres) is great when:

- You need transactional integrity (e.g., financial data)

- Your data model is relational, and joins are important

NoSQL (e.g., Cassandra, DynamoDB) is better for:

- Massive horizontal scale

- Flexible schemas and high write throughput

For this read-heavy social feed with relaxed consistency needs and heavy fan-out writes, I’d lean toward a NoSQL solution that can efficiently handle fan-out and sharded storage.

Push vs Pull Model

- Push model (fan-out on write): Precompute followers’ feeds when new content is posted (write-heavy)

  - Pro: Low latency on reads

  - Con: High write cost, harder to update content

- Pull model (fan-out on read): Build the feed on demand from the accounts a user follows and their posts (read-heavy)

  - Pro: Flexible, storage-efficient

  - Con: Slower reads, more complex logic

In FAANG System Design interviews, I usually suggest a hybrid approach: push for typical accounts with a modest follower count, and pull for influencers and celebrities, because pushing one post into millions of follower feeds is the most expensive write in the system.
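Here is one common way a hybrid can be wired up, as an in-memory sketch. It assumes a follower-count threshold decides the model: authors below it fan out on write, while authors at or above it are merged in at read time, since writing a single post into a very large number of feeds is the costly case. `HybridFeed` and the threshold value are illustrative, not a reference design.

```python
from collections import defaultdict

CELEBRITY_THRESHOLD = 10_000  # assumed cutoff; tune from real follower distributions


class HybridFeed:
    def __init__(self, threshold=CELEBRITY_THRESHOLD):
        self.threshold = threshold
        self.followers = defaultdict(set)         # author -> users following them
        self.following = defaultdict(set)         # user -> authors they follow
        self.inbox = defaultdict(list)            # user -> [(ts, post_id)] pushed at write time
        self.posts_by_author = defaultdict(list)  # author -> [(ts, post_id)]

    def follow(self, user, author):
        self.followers[author].add(user)
        self.following[user].add(author)

    def _is_high_fanout(self, author):
        return len(self.followers[author]) >= self.threshold

    def publish(self, author, post_id, ts):
        self.posts_by_author[author].append((ts, post_id))
        if not self._is_high_fanout(author):
            # Push: copy the post into each follower's precomputed feed.
            for user in self.followers[author]:
                self.inbox[user].append((ts, post_id))

    def read_feed(self, user, limit=20):
        # A set removes duplicates if an author crossed the threshold after earlier pushes.
        items = set(self.inbox[user])
        for author in self.following[user]:
            if self._is_high_fanout(author):
                # Pull: merge high-fanout authors' posts at read time.
                items.update(self.posts_by_author[author])
        newest_first = sorted(items, reverse=True)
        return [post_id for _, post_id in newest_first[:limit]]
```

In a real system the inbox would live in a cache or feed table and the fan-out loop would run in async workers, but the branching logic is the part interviewers tend to probe.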

Monolith vs Microservices

- Monoliths: Easier to develop and deploy early

- Microservices: Scale better with independent teams, decoupled failure domains

The trade-off is around operational complexity. Many companies, FAANG included, start with monoliths and gradually extract services as scaling needs grow.

Global vs Regional Architecture

- Global DB with high latency vs regional replicas for fast reads

- Consider GDPR or data residency needs

The goal in this section isn’t to pick a “correct” answer. It’s to explain your reasoning clearly. In a FAANG System Design interview, strong candidates say things like:

“I’d pick X over Y because of our expected read/write ratio, but if scale or product needs shift, I’d revisit this trade-off.”

That’s how you demonstrate real engineering judgment.

Step 7: Handling Bottlenecks and Scaling Up

Now that you’ve built a solid system and thought through trade-offs, zoom out and ask: What happens when traffic explodes? In a FAANG System Design interview, scaling and resiliency discussions are often what separate good from great candidates.

Here are common bottlenecks and how to handle them:

Cache Overload / Thundering Herd

Problem: Cache goes down, all clients hit DB at once → massive load spike

Solution:

- Use staggered TTLs to avoid synchronized expiry

- Implement request coalescing: only one request rebuilds the cache

- Apply circuit breakers or rate limiting to protect downstream systems
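Two of these mitigations, staggered TTLs and request coalescing, fit in a short sketch. This assumes a threaded application server; `jittered_ttl` and `SingleFlight` are hypothetical names (the second borrows the "single flight" idea of letting one caller load a key while the others wait for its result).

```python
import random
import threading


def jittered_ttl(base_seconds, spread=0.1, rng=random.random):
    """Return base_seconds +/- spread so keys written together do not expire together."""
    return base_seconds * (1 + spread * (2 * rng() - 1))


class SingleFlight:
    """Coalesce concurrent loads of the same key into a single call to the loader."""

    def __init__(self):
        self._lock = threading.Lock()
        self._inflight = {}  # key -> shared record for the load in progress

    def do(self, key, loader):
        with self._lock:
            call = self._inflight.get(key)
            leader = call is None
            if leader:
                call = {"done": threading.Event(), "value": None, "error": None}
                self._inflight[key] = call
        if not leader:
            # Another thread is already rebuilding this key: wait for its result.
            call["done"].wait()
            if call["error"] is not None:
                raise call["error"]
            return call["value"]
        try:
            call["value"] = loader()
            return call["value"]
        except Exception as exc:
            call["error"] = exc
            raise
        finally:
            with self._lock:
                del self._inflight[key]
            call["done"].set()
```

With this in front of the database, a burst of misses for the same hot key results in one query instead of one per request. Across multiple servers you would need a distributed equivalent, such as a short-lived lock in the cache itself.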

Database Write Contention

Problem: Too many concurrent writes to a shard

Solution:

- Use sharded queues to spread writes across partitions

- Introduce batching and write-behind buffering (queue writes, then flush them in groups)

- Scale writes horizontally with eventual consistency

Geo-distribution

Problem: High latency for global users

Solution:

- Deploy read replicas in multiple regions

- Use GeoDNS and CDNs to serve static assets closer to users

- Sync writes asynchronously between regions with conflict resolution

Queue Backpressure

Problem: Slow consumers, unbounded queue growth

Solution:

- Add auto-scaling consumers

- Monitor queue depth, apply processing timeouts, and route repeatedly failing messages to DLQs

- Alert on processing lag using observability stack (e.g., Prometheus)

When you walk through failure scenarios in your FAANG System Design interview, frame it like this:

“If our traffic goes from 10K to 100K QPS during a product launch, I’d expect Redis to become a bottleneck. I’d monitor hit ratios and latency spikes, and proactively shard or replicate Redis to handle the surge.”

That level of foresight is exactly what FAANG engineers are expected to bring to the table.

Step 8: Wrap-Up, Edge Cases, and Future Improvements

As you approach the end of your FAANG System Design interview, it’s tempting to stop once the core design is complete. Don’t. Use the last few minutes to leave a strong impression by tying everything together and showing you can think ahead.

Here’s my checklist:

Recap the Design

“We built a horizontally scalable feed system that handles 10K QPS with a hybrid push-pull model, Redis for caching, Kafka for async fan-out, and S3 for media. Sharding by user_id ensures even load, and TTL policies keep content fresh.”

Edge Cases to Call Out

- Content moderation: queue for review, or auto-flag rules

- Spam prevention: rate limiting by IP/user/device

- Feed ranking: future ML pipeline for engagement-based ordering

- Data deletion: GDPR-compliant erase requests

Future Improvements

- Add observability dashboards

- Explore ML-based feed ranking

- Enable blue-green deployments for safer releases

- Scale to multi-region architecture for global users

This part of the FAANG System Design interview is your closing pitch. It shows you’re not just building for now. You’re building for next quarter, next year, and the next billion users.

Common FAANG System Design Interview Questions and Sample Answers

When preparing for a FAANG system design interview, you’ll often face follow-up questions that probe your reasoning, depth, scalability mindset, and your ability to balance performance, availability, and simplicity. The best candidates not only walk through strong designs, but also field questions like senior engineers defending architectural decisions during design reviews.

Below are the most common FAANG system design interview questions, along with high-quality sample answers that reflect the voice of an experienced engineer.

1. How would you design a scalable news feed like Facebook or Instagram?

What they’re testing: Trade-offs between push and pull models, caching, and scalability under read-heavy workloads.

Sample Answer:

“I’d use a hybrid approach:

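- Push model for typical users: fan out each new post into their followers’ precomputed feeds on post creation.

- Pull model for high-fanout users (e.g., celebrities): merge their recent posts into a follower’s feed at read time, so one post doesn’t trigger millions of feed writes.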

We’d store feed entries in a denormalized feed table keyed by user, and cache the top 50 posts in Redis with TTL.

On the backend, writes would go through Kafka for fan-out processing. We’d shard the database by user ID and horizontally scale read replicas to serve high QPS.

Caching and prefetching would ensure we meet our sub-second latency SLO.”

2. How do you decide between SQL and NoSQL databases for your design?

What they’re testing: Data modeling maturity, consistency needs, scaling trade-offs.

Sample Answer:

“If the system has strong relational integrity needs, like transactions, joins, or constraints, I’d lean SQL (Postgres, MySQL).

But for high-write throughput, flexible schemas, and horizontal scale, I’d go NoSQL (Cassandra, DynamoDB).

For example, in a social app:

- User and post data → SQL

- Feed events and logs → NoSQL

In the FAANG system design interview, I’d explicitly explain the trade-off between ACID compliance and eventual consistency, and match that to the product’s latency or consistency goals.”

3. How would you handle a sudden spike in traffic, 10× in a single day?

What they’re testing: Resilience, autoscaling, bottleneck awareness.

Sample Answer:

“First, I’d identify potential chokepoints:

- DB write saturation

- Cache misses flooding the origin

- Load balancer queue depth

To mitigate:

- Use autoscaling for stateless app servers

- Add circuit breakers between the cache layer and the database, so a wave of cache misses can’t overwhelm it

- Apply rate limiting at the gateway, and exponential backoff with jitter on client retries

I’d also pre-warm caches and ensure high-read endpoints have CDN support where possible.”

4. How do you ensure high availability in your system?

What they’re testing: Fault tolerance design, regional replication, failover strategy.

Sample Answer:

“I’d aim for N+1 redundancy across all critical components.

- Multiple load balancers (with health checks)

- Replica sets for databases with auto failover

- Redundant queues (Kafka + backup consumers)

For global services, I’d use active-active regions with traffic split by GeoDNS, and fallback to read-only mode if write quorum is unavailable.

Monitoring, alerting, and synthetic probes would help detect outages early.”

5. What’s your approach to designing APIs for high-QPS systems?

What they’re testing: API idempotency, pagination, statelessness, performance.

Sample Answer:

“I’d keep APIs stateless and idempotent. For example:

- Use naturally idempotent methods like PUT where possible, and idempotency keys on POST so retries are safe

- Use cursor-based pagination for large result sets

- Minimize payload size (send only what the client needs)

For high-QPS endpoints, I’d implement read-through caching, limit optional joins, and use async processing for side effects like logging or analytics.”
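Cursor-based pagination from that answer can be sketched as follows. The assumptions: posts are `(timestamp, post_id)` tuples sorted newest first, and the cursor is an opaque base64 token encoding the last item seen. `encode_cursor`, `decode_cursor`, and `fetch_page` are hypothetical helpers; in a database the filter would become a keyset condition on an indexed `(timestamp, post_id)` pair rather than a list scan.

```python
import base64
import json


def encode_cursor(timestamp, post_id):
    """Pack the last-seen position into an opaque, URL-safe token."""
    raw = json.dumps([timestamp, post_id]).encode("utf-8")
    return base64.urlsafe_b64encode(raw).decode("ascii")


def decode_cursor(cursor):
    timestamp, post_id = json.loads(base64.urlsafe_b64decode(cursor.encode("ascii")))
    return timestamp, post_id


def fetch_page(posts, limit, cursor=None):
    """Return (page, next_cursor) for posts sorted newest first."""
    if cursor is not None:
        boundary = decode_cursor(cursor)
        # Keep only items strictly older than the last one the client saw.
        posts = [post for post in posts if post < boundary]
    page = posts[:limit]
    next_cursor = encode_cursor(*page[-1]) if len(posts) > limit else None
    return page, next_cursor


all_posts = [(5, "e"), (4, "d"), (3, "c"), (2, "b"), (1, "a")]
page, cursor = fetch_page(all_posts, limit=2)
while cursor is not None:
    print(page)
    page, cursor = fetch_page(all_posts, limit=2, cursor=cursor)
print(page)
```

Unlike offset pagination, new posts arriving at the top do not shift later pages, which matters for feeds that change between requests.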

6. Explain how you’d design a distributed rate limiter.

What they’re testing: Coordination across servers, consistency, performance.

Sample Answer:

“I’d use a token bucket algorithm stored in a shared Redis cluster.

Each API request would check and decrement a user-scoped token bucket.

To reduce hotkey issues, I’d hash keys and spread across Redis shards.

For global coordination across data centers, I’d layer in eventual-consistency-friendly algorithms like sliding window counters or use CDN edge rate-limiting where supported.”
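The token bucket itself is simple; the distributed part is where the state lives. Below is a single-process sketch, assuming an injectable clock for testing; `TokenBucket` is an illustrative class, not a Redis client API. In the shared-Redis version described above, the refill-check-decrement sequence would need to run atomically, for example inside a server-side script.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `refill_per_second` tokens per second."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)  # start full so an idle user can burst
        self._updated = clock()

    def allow(self, cost=1.0):
        now = self._clock()
        elapsed = now - self._updated
        # Refill lazily based on elapsed time, never exceeding capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_second)
        self._updated = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


# One bucket per user; a dict stands in for the shared store.
buckets = {}


def allow_request(user_id, capacity=5, refill_per_second=1.0):
    bucket = buckets.setdefault(user_id, TokenBucket(capacity, refill_per_second))
    return bucket.allow()
```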

7. Design a URL shortening service like bit.ly.

What they’re testing: High-read scalability, key generation strategy, data modeling.

Sample Answer:

“This is a read-heavy system. I’d:

- Use base62 or hash-based shortcodes

- Store mappings in a key-value store like DynamoDB

- Cache top links in Redis with TTL

I’d support analytics via a side channel: log click events to Kafka and process asynchronously into a data warehouse.

For high availability, I’d replicate data across regions and load balance requests with consistent hashing.”
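For the base62 option, a typical approach is to encode a unique numeric ID (for example, from a counter or ID generator) into a short string. A minimal sketch follows; the alphabet ordering is an arbitrary choice for this example, and `encode_base62` / `decode_base62` are hypothetical helper names.

```python
ALPHABET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"
BASE = len(ALPHABET)  # 62


def encode_base62(number):
    """Turn a non-negative integer ID into a short base62 string."""
    if number < 0:
        raise ValueError("number must be non-negative")
    if number == 0:
        return ALPHABET[0]
    digits = []
    while number:
        number, remainder = divmod(number, BASE)
        digits.append(ALPHABET[remainder])
    return "".join(reversed(digits))


def decode_base62(code):
    """Inverse of encode_base62."""
    number = 0
    for char in code:
        number = number * BASE + ALPHABET.index(char)
    return number


short = encode_base62(123_456_789)
print(short, decode_base62(short))
```

Seven base62 characters cover 62^7 IDs, roughly 3.5 trillion, which is a useful number to quote when sizing the key space.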

8. What’s the difference between horizontal and vertical scaling? Which would you choose and when?

What they’re testing: Scalability intuition and cloud-native mindset.

Sample Answer:

“Vertical scaling means adding more CPU, RAM, or SSD to a single instance. It’s simpler but has diminishing returns and hardware limits.

Horizontal scaling means adding more nodes or replicas. It’s harder to orchestrate but has a far higher ceiling, as long as the workload can be partitioned.

I’d usually start vertically during MVP for simplicity, but switch to horizontal once I see traffic bottlenecks or want resilience via replication.”

9. How would you design a feature flag system used across multiple services?

What they’re testing: Distributed state, consistency, availability.

Sample Answer:

“I’d build a feature flag service with:

- A central config store (e.g., etcd or Redis)

- SDK clients with local caching and TTL

- Polling or push via pub-sub (Kafka/WebSockets) to propagate changes

Feature rules would support user segments, AB testing, or rollout % thresholds.

Services would fall back to the last-known (possibly stale) config, or to safe defaults, in case of a flag server outage.”
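Two pieces of that design are easy to sketch: deterministic percentage rollouts and a client that keeps serving its last-known config when the flag server is unreachable. This is an illustration under assumptions: flags map to a rollout percentage, `fetch` is a hypothetical callable that returns the current config, and `in_rollout` / `FlagClient` are made-up names.

```python
import hashlib


def in_rollout(flag_name, user_id, percent):
    """Deterministically place a user into one of 100 buckets per flag."""
    key = f"{flag_name}:{user_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 100
    return bucket < percent


class FlagClient:
    """SDK-style client with a local cache of the last successfully fetched config."""

    def __init__(self, fetch, default=False):
        self._fetch = fetch        # hypothetical: returns {flag_name: rollout_percent}
        self._last_known = {}
        self._default = default

    def refresh(self):
        try:
            self._last_known = dict(self._fetch())
        except Exception:
            pass  # flag server unreachable: keep serving the last-known config

    def is_enabled(self, flag_name, user_id):
        if flag_name not in self._last_known:
            return self._default
        return in_rollout(flag_name, user_id, self._last_known[flag_name])
```

Hashing the flag name together with the user ID means a user keeps the same answer across requests and services, and raising the percentage only adds users without flipping anyone off.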

10. How would you scale your database in a growing system?

What they’re testing: Partitioning, replication, indexing, storage strategy.

Sample Answer:

“I’d use read replicas to offload traffic, and partition (shard) the data by user ID or tenant.

Write-heavy tables (like logs or messages) would get their own partitions.

I’d also monitor slow queries and add compound indexes as needed.

For massive growth, I’d move to distributed stores like Spanner or Cassandra, which partition data automatically (Cassandra via consistent hashing), and enable multi-region replication.”

Final Takeaways & Prep Strategy

Let’s wrap this up with a few concrete pointers. The FAANG System Design interview rewards structured thinking, curiosity, and the ability to explain complex ideas simply.

Here’s how to build those skills:

Practice with Real Prompts

- “Design WhatsApp messaging”

- “Design YouTube video streaming”

- “Design Google Docs collaboration”

- “Design Netflix content recommendation”

Use the framework from this guide for each one: Clarify → Estimate → High-Level Design → APIs & Data Model → Deep Dive → Trade-offs → Bottlenecks & Scaling → Wrap-Up

Mock Interviews Are Gold

Use platforms like MockInterviews.dev, Pramp, or peer Slack/Discord groups. Practicing aloud helps internalize your framework and refine your communication style.

Build Real Systems

Nothing beats experience. Try side projects or contribute to open-source projects with real architecture. Seeing how systems evolve over time gives you authentic examples to draw from during your FAANG System Design interview.

Create Your Playbook

Keep a personal doc with:

- Common patterns (e.g., pub-sub, sharding, CQRS)

- Reference diagrams

- Trade-off cheat sheets

This becomes your pre-interview warm-up kit.

Final Word

The FAANG System Design interview is tough, but it’s also learnable. You don’t need to memorize a hundred systems. You need to practice breaking problems down, estimating scale, choosing components wisely, and defending your decisions.

If you’ve made it this far through the guide, you’re already ahead of the curve.