Design a System to Interview Candidates: System Design Interview Guide

When an interviewer asks you to design a system to interview candidates, they are not testing your knowledge of hiring processes or interview questions. They are evaluating your ability to design a scalable, reliable, and fair system that coordinates people, data, and workflows under real-world constraints.

This system sits at the intersection of scheduling, communication, evaluation, and decision-making. In a System Design interview, the goal is to demonstrate how you handle complexity, human-in-the-loop workflows, and non-functional requirements like fairness, auditability, and reliability. Interviewers want to see whether you can design infrastructure that supports hiring at scale without breaking down operationally or ethically.

Clarifying requirements and assumptions upfront

Interview systems are deceptively complex because they involve humans rather than purely automated services. Clarifying requirements early prevents incorrect assumptions about scale, workflows, and constraints. In interviews, this step shows that you understand how system behavior changes based on hiring volume, company size, and interview structure.

A startup hiring a few engineers per quarter needs a very different system from a global company hiring thousands of candidates across regions and roles. Without establishing this context, architectural decisions lose relevance.

Defining the scope of the interview system

One of the first clarifications is whether the system handles the entire hiring pipeline or only the interview phase. Some systems manage applications, screening, interviews, and offers, while others integrate with existing applicant tracking systems and focus only on interview coordination and evaluation.

You should also clarify whether interviews are synchronous, asynchronous, or hybrid. Live video interviews, coding exercises, take-home assessments, and recorded interviews all impose different system requirements. These choices affect storage, execution, scheduling, and data retention.

Functional requirements to establish early

At a functional level, the system must manage candidate profiles, interviewer profiles, interview sessions, and feedback. It should support creating interview loops, assigning interviewers, scheduling sessions, collecting structured evaluations, and aggregating results for decision-making.
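As a rough illustration, the entities named above could be modeled with a handful of record types. This is a minimal sketch rather than a prescribed schema: every class and field name here (`Candidate`, `InterviewLoop`, `SessionStatus`, and so on) is an assumption made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum
from typing import Dict, List, Optional, Set


class SessionStatus(Enum):
    SCHEDULED = "scheduled"
    COMPLETED = "completed"
    CANCELLED = "cancelled"


@dataclass
class Candidate:
    candidate_id: str
    name: str
    role_applied: str


@dataclass
class Interviewer:
    interviewer_id: str
    name: str
    skills: Set[str] = field(default_factory=set)


@dataclass
class Feedback:
    interviewer_id: str
    scores: Dict[str, int]   # rubric criterion -> score (hypothetical rubric)
    recommendation: str      # e.g. "hire" or "no_hire"


@dataclass
class InterviewSession:
    session_id: str
    candidate_id: str
    interviewer_id: str
    starts_at: datetime
    status: SessionStatus = SessionStatus.SCHEDULED
    feedback: Optional[Feedback] = None


@dataclass
class InterviewLoop:
    loop_id: str
    candidate_id: str
    sessions: List[InterviewSession] = field(default_factory=list)


# Build a loop with one scheduled session and no feedback yet.
loop = InterviewLoop("loop-1", "cand-1")
loop.sessions.append(
    InterviewSession("s-1", "cand-1", "int-1", datetime(2024, 1, 15, 10, 0))
)
```

Keeping feedback attached to a session, and sessions attached to a loop, makes it straightforward to ask later whether a loop is complete.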

Clarifying whether interviewers are internal employees, external contractors, or automated systems helps define access control and workflow complexity. You should also determine how decisions are made and whether the system enforces hiring rules or only provides recommendations.

Non-functional requirements that shape the design

Non-functional requirements play an outsized role in this problem. Reliability is critical because missed or broken interviews directly affect candidate experience. Scalability matters during peak hiring periods. Latency constraints influence scheduling responsiveness and interview startup times.

Fairness and auditability are especially important. The system may need to support anonymized reviews, structured feedback, and historical audit logs to meet compliance and internal policy requirements. Explicitly calling out these concerns demonstrates senior-level thinking.

Making reasonable assumptions under ambiguity

Interviewers may intentionally leave details vague. In that case, it is acceptable to assume a medium-to-large organization hiring across multiple roles and regions with a mix of technical and non-technical interviews. Clearly stating assumptions before proceeding allows the interviewer to correct course if needed and signals confidence in navigating uncertainty.

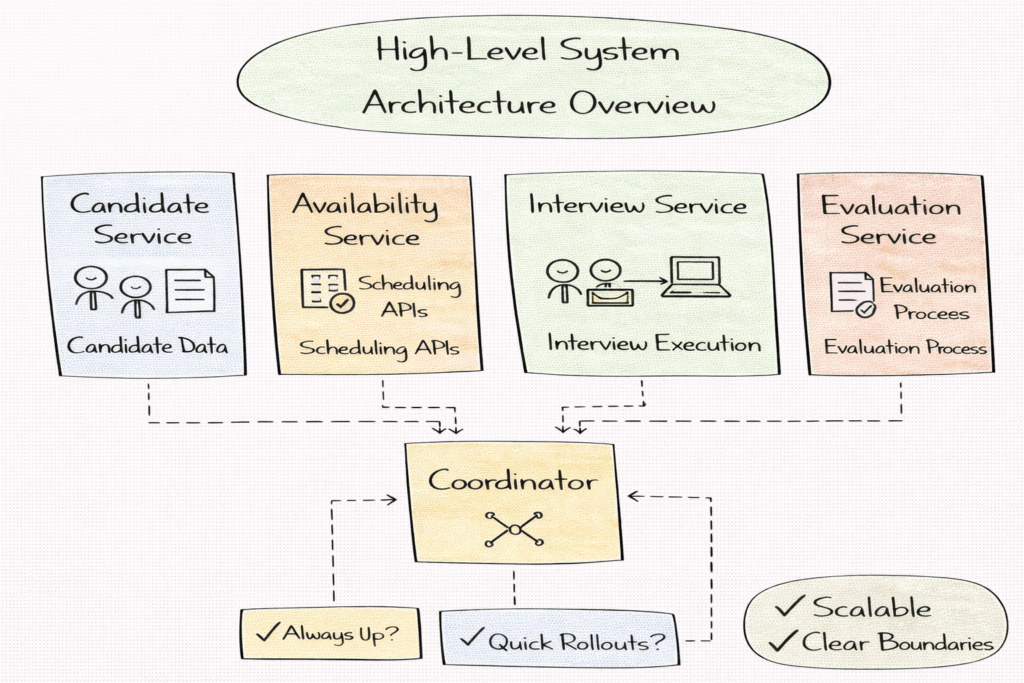

High-level system architecture overview

Before discussing individual services, it is important to outline the overall shape of the interview system. At a high level, the system coordinates candidate data, interviewer availability, interview execution, and evaluation workflows. Separating these concerns makes the system easier to scale and reason about.

In interviews, starting with a high-level architecture helps the interviewer follow your thinking and gives you a framework to anchor deeper discussions later.

Control plane versus execution plane

A useful way to structure the architecture is to separate decision-making from execution. The control plane manages interview workflows, scheduling logic, state transitions, and policy enforcement. It determines who should be interviewed, when sessions occur, and how feedback is collected and aggregated.

The execution plane handles the actual interview interactions. This includes video sessions, coding environments, assessment delivery, and real-time communication. Decoupling execution from orchestration allows the system to scale interview volume without overloading core workflow logic.

Core data flow through the system

The system begins with candidate intake, either through direct applications or integrations with external hiring platforms. Candidate profiles are created and enriched as they move through interview stages. Interviewers interact with the system through scheduling interfaces and evaluation tools.

During interviews, execution services collect session data and feedback. This information flows back to the control plane, where it is stored, processed, and surfaced for hiring decisions. Clearly describing this flow demonstrates an understanding of state transitions and data ownership.

Designing for modularity and extensibility

Interview systems evolve frequently as hiring practices change. A modular architecture allows new interview formats, evaluation criteria, or scheduling rules to be added without rewriting the entire system. In interviews, highlighting extensibility shows that you are designing for long-term use rather than a one-off solution.

This high-level architecture sets the foundation for deeper discussions about components, workflows, scaling, and fairness in later sections.

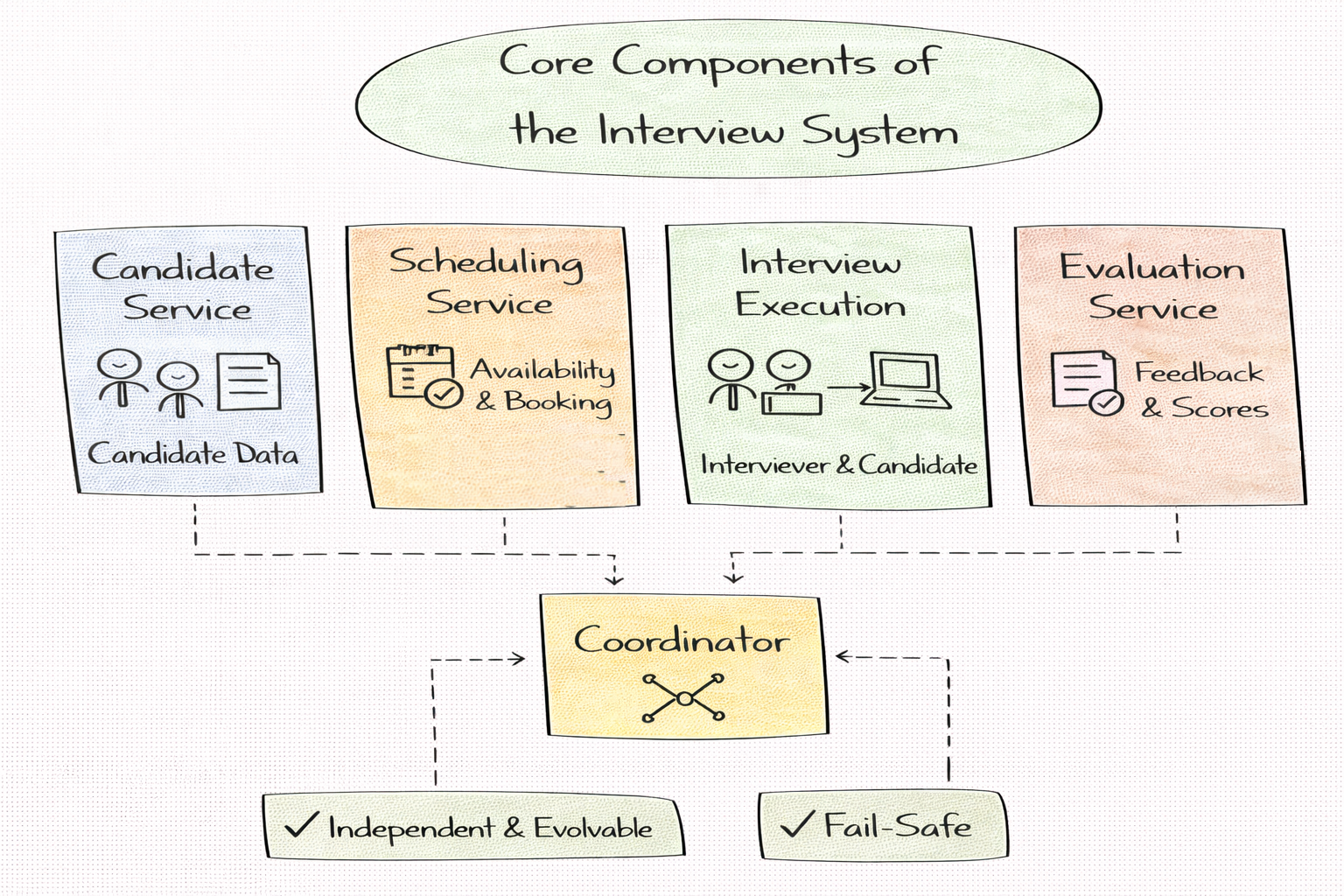

Core components of the interview system

A strong interview system is composed of modular components with clearly defined responsibilities. In System Design interviews, candidates often fail this section by merging scheduling, evaluation, and execution logic into a single service. Separating concerns improves scalability, simplifies maintenance, and reduces the risk of cascading failures.

The interview system should act as a coordination platform rather than a monolithic application. Each component should be independently scalable and evolvable as hiring practices change.

Candidate profile and application management

This component stores candidate information, interview stage progression, and historical interactions. It acts as the source of truth for where a candidate is in the interview pipeline. The system should support updates over time, such as new interviews, rescheduling events, or feedback revisions.

In interviews, it is important to emphasize data consistency and privacy. Candidate data must be protected while remaining accessible to authorized interviewers and recruiters.

Interviewer management and availability tracking

The interviewer management component tracks interviewer profiles, roles, expertise, and availability. This includes calendar integration, time zone awareness, and workload distribution. The system should avoid overloading specific interviewers while ensuring that interviews are staffed by qualified participants.

Explaining how the system balances interviewer load demonstrates awareness of operational constraints and human factors.
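One simple way to express load balancing is to pick the qualified interviewer with the fewest sessions already scheduled. The sketch below assumes a weekly cap per interviewer and a single required skill per session; `InterviewerLoad`, `weekly_cap`, and `pick_interviewer` are hypothetical names, and a real system would likely weigh more signals.

```python
from dataclasses import dataclass, field


@dataclass
class InterviewerLoad:
    interviewer_id: str
    skills: set = field(default_factory=set)
    scheduled_this_week: int = 0
    weekly_cap: int = 5  # assumed per-interviewer limit


def pick_interviewer(pool, required_skill):
    """Return the qualified interviewer with the fewest scheduled sessions, or None."""
    eligible = [
        i for i in pool
        if required_skill in i.skills and i.scheduled_this_week < i.weekly_cap
    ]
    if not eligible:
        return None
    # Tie-break on the ID so the choice is deterministic.
    return min(eligible, key=lambda i: (i.scheduled_this_week, i.interviewer_id))


pool = [
    InterviewerLoad("alice", {"backend", "system_design"}, scheduled_this_week=4),
    InterviewerLoad("bob", {"system_design"}, scheduled_this_week=1),
    InterviewerLoad("carol", {"frontend"}, scheduled_this_week=0),
]
chosen = pick_interviewer(pool, "system_design")
print(chosen.interviewer_id)  # bob
```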

Interview session orchestration

This component coordinates the lifecycle of an interview session. It schedules sessions, sends notifications, provisions interview resources, and tracks session status. It must handle changes gracefully, including cancellations and rescheduling.

In a System Design interview, this is where you can discuss state machines and workflow engines without diving into low-level implementation.

Evaluation and feedback aggregation

After interviews, structured feedback must be collected and aggregated. This component ensures that feedback is standardized, time-bound, and auditable. It may also enforce submission deadlines to prevent bias caused by delayed feedback.

In a System Design interview, expect to be asked explicitly how feedback is stored, accessed, and protected from premature influence.

Interview workflows and candidate lifecycle

The candidate lifecycle represents the sequence of states a candidate passes through, from application to final decision. Modeling this lifecycle explicitly helps the system enforce consistency and prevent candidates from skipping required steps.

In interviews, explaining this as a state-driven workflow shows clarity and control over complex processes.
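A state-driven lifecycle can be sketched as a table of allowed transitions that every state change is checked against. The stage names and the transition table below are assumptions for illustration; a real pipeline would define its own stages.

```python
from enum import Enum


class Stage(Enum):
    APPLIED = "applied"
    SCREENING = "screening"
    ONSITE_LOOP = "onsite_loop"
    DECISION = "decision"
    OFFER = "offer"
    REJECTED = "rejected"


# Each stage maps to the set of stages it may move to next.
ALLOWED = {
    Stage.APPLIED: {Stage.SCREENING, Stage.REJECTED},
    Stage.SCREENING: {Stage.ONSITE_LOOP, Stage.REJECTED},
    Stage.ONSITE_LOOP: {Stage.DECISION},
    Stage.DECISION: {Stage.OFFER, Stage.REJECTED},
    Stage.OFFER: set(),      # terminal
    Stage.REJECTED: set(),   # terminal
}


class InvalidTransition(Exception):
    pass


def advance(current, target):
    """Return the new stage, or raise if the move would skip a required step."""
    if target not in ALLOWED[current]:
        raise InvalidTransition(f"{current.value} -> {target.value} is not allowed")
    return target


stage = advance(Stage.APPLIED, Stage.SCREENING)   # allowed
# advance(Stage.APPLIED, Stage.OFFER) would raise InvalidTransition
```

Because the rules live in data rather than in scattered conditionals, adding a stage means editing one table.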

Screening and early-stage interviews

Early stages often involve automated screening, recruiter calls, or basic assessments. The system should support lightweight interactions at this stage while collecting enough data to make informed decisions about progression.

Discussing how early-stage filtering reduces downstream load demonstrates systems thinking beyond individual features.

Multi-round and loop-based interviews

Later stages typically involve multiple interviews conducted by different interviewers. The system must coordinate these sessions, manage dependencies, and ensure all required feedback is collected before a decision is made.

Interviewers often probe how you prevent incomplete loops or missing evaluations. Explaining enforcement mechanisms shows attention to process integrity.
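One possible enforcement mechanism is a gate that refuses to open the decision step while any required evaluation is missing. The function names below are hypothetical, and the sketch assumes one feedback record per assigned interviewer.

```python
def missing_feedback(required_interviewers, submitted_by):
    """Return the interviewers who still owe feedback, in sorted order."""
    return sorted(set(required_interviewers) - set(submitted_by))


def ready_for_decision(required_interviewers, submitted_by):
    """A loop is ready only when every required interviewer has submitted."""
    return not missing_feedback(required_interviewers, submitted_by)


required = ["alice", "bob", "carol"]
submitted = ["carol", "alice"]

print(missing_feedback(required, submitted))    # ['bob']
print(ready_for_decision(required, submitted))  # False
```

The list of missing interviewers doubles as the input for reminder notifications.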

Decision-making and candidate progression

Once interviews are complete, the system aggregates feedback and surfaces it for hiring decisions. It should support different decision models, such as unanimous agreement, majority votes, or hiring committee reviews.

Highlighting flexibility in decision workflows demonstrates that the system can adapt to different organizational policies.
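Pluggable decision models can be sketched as named strategies selected per role or team. The sketch below assumes each interviewer's recommendation is reduced to a boolean hire vote; the registry name `DECISION_MODELS` is hypothetical, and a hiring committee review would remain a human step that this code does not try to automate.

```python
def unanimous(votes):
    """Every interviewer must vote to hire; an empty loop never passes."""
    return bool(votes) and all(votes)


def majority(votes):
    """Strictly more than half of the votes must be to hire."""
    return sum(votes) * 2 > len(votes)


# Registry of decision models; an organization would pick one per role or team.
DECISION_MODELS = {
    "unanimous": unanimous,
    "majority": majority,
}


def decide(model_name, votes):
    return DECISION_MODELS[model_name](votes)


votes = [True, True, False]
print(decide("majority", votes))   # True
print(decide("unanimous", votes))  # False
```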

Scheduling, coordination, and conflict handling

Scheduling is one of the most challenging aspects of interview systems. It involves coordinating multiple calendars, time zones, and availability constraints while minimizing delays and rescheduling.

In interviews, acknowledging scheduling complexity early shows realism and practical experience.

Availability modeling and time zone handling

The system must model interviewer and candidate availability accurately. Time zone normalization is critical to avoid scheduling errors. Availability should be represented in a way that supports quick conflict detection and resolution.

Explaining how availability is abstracted rather than hardcoded demonstrates architectural thinking.
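A common approach to time zone normalization is to store every availability window in UTC and compare only UTC values. The sketch below uses fixed UTC offsets to stay self-contained, which is a simplifying assumption: a production system would more likely use IANA zone names so that daylight saving changes are handled. `to_utc` and `overlap` are hypothetical helper names.

```python
from datetime import datetime, timedelta, timezone


def to_utc(local_naive, utc_offset_hours):
    """Attach a fixed offset to a naive local time and convert it to UTC."""
    tz = timezone(timedelta(hours=utc_offset_hours))
    return local_naive.replace(tzinfo=tz).astimezone(timezone.utc)


def overlap(a_start, a_end, b_start, b_end):
    """Return the shared (start, end) window of two ranges, or None."""
    start = max(a_start, b_start)
    end = min(a_end, b_end)
    return (start, end) if start < end else None


# Interviewer at UTC+1 is free 15:00-18:00 local time.
i_start = to_utc(datetime(2024, 1, 15, 15, 0), +1)
i_end = to_utc(datetime(2024, 1, 15, 18, 0), +1)

# Candidate at UTC-5 is free 09:00-11:00 local time.
c_start = to_utc(datetime(2024, 1, 15, 9, 0), -5)
c_end = to_utc(datetime(2024, 1, 15, 11, 0), -5)

window = overlap(i_start, i_end, c_start, c_end)
print(window[0].isoformat(), window[1].isoformat())
# 2024-01-15T14:00:00+00:00 2024-01-15T16:00:00+00:00
```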

Conflict detection and resolution strategies

Conflicts arise when availability changes or when interviews overlap. The system should detect conflicts early and provide resolution paths such as alternative time slots or interviewer substitutions.

In a System Design interview, discussing how the system handles last-minute changes helps show robustness under real-world conditions.
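Conflict detection often reduces to an interval-overlap check, and one resolution path is to walk a list of alternative slots until one is free. This sketch assumes all times are already normalized to a single zone and that intervals are half-open, so back-to-back sessions do not conflict; the function names are hypothetical.

```python
from datetime import datetime


def find_conflicts(booked, start, end):
    """Return booked (start, end) intervals that overlap the range [start, end)."""
    return [(s, e) for s, e in booked if s < end and start < e]


def first_free_slot(booked, proposals):
    """Return the first proposed (start, end) slot with no conflicts, or None."""
    for start, end in proposals:
        if not find_conflicts(booked, start, end):
            return (start, end)
    return None


def at(hour, minute=0):
    """Helper for readable example times on a single day."""
    return datetime(2024, 1, 15, hour, minute)


booked = [(at(10), at(11)), (at(13), at(14))]
proposals = [(at(10, 30), at(11, 30)), (at(11), at(12)), (at(15), at(16))]

print(find_conflicts(booked, at(10, 30), at(11, 30)))  # overlaps the 10:00-11:00 session
print(first_free_slot(booked, proposals))              # the 11:00-12:00 slot
```

A linear scan is adequate for one person's calendar; larger searches across many calendars might justify an interval tree or a calendar service query instead.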

Rescheduling and cancellation handling

Rescheduling should be treated as a first-class operation rather than an exception. The system must update the state consistently, notify participants, and ensure that interview loops remain valid.

In a System Design interview, rescheduling flows that minimize disruption for the person being interviewed and keep state transitions clear tend to be well received.
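Treating rescheduling as a first-class operation could look like a single function that validates the current state, produces an updated session, and queues notifications together. The `Session` fields, status strings, and `revision` counter below are assumptions for this sketch.

```python
from dataclasses import dataclass, replace
from datetime import datetime


@dataclass(frozen=True)
class Session:
    session_id: str
    starts_at: datetime
    status: str = "scheduled"
    revision: int = 1  # incremented on every reschedule


def reschedule(session, new_start, outbox):
    """Return an updated session and queue a notification for participants."""
    if session.status not in {"scheduled", "interrupted"}:
        raise ValueError(f"cannot reschedule a session in state {session.status!r}")
    updated = replace(
        session,
        starts_at=new_start,
        status="scheduled",
        revision=session.revision + 1,
    )
    outbox.append(("rescheduled", session.session_id, new_start.isoformat()))
    return updated


outbox = []
original = Session("s-1", datetime(2024, 1, 15, 10, 0))
moved = reschedule(original, datetime(2024, 1, 16, 14, 0), outbox)
print(moved.revision, outbox)
```

The immutable record keeps the earlier revision available for history, and the revision number gives downstream consumers a way to ignore stale updates.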

Scaling the interview system

Scaling an interview system is not driven by user traffic in the traditional sense, but by the coordination of people, schedules, and workflows. As hiring volume grows, the system must handle more candidates, more interviewers, and more concurrent interview sessions. These dimensions scale independently and can stress different parts of the system at different times.

In interviews, explaining what actually needs to scale shows that you understand the operational reality of hiring platforms.

Horizontal scaling of workflow orchestration

The orchestration layer that manages candidate progression and interview workflows must scale horizontally. Stateless workflow engines backed by a shared state store allow multiple instances to process candidate transitions concurrently. Care must be taken to ensure consistency when multiple actions occur simultaneously, such as feedback submissions and scheduling updates.

Describing how concurrency is handled demonstrates comfort with distributed systems concepts.
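One widely used technique for this is optimistic concurrency control: each record carries a version, and a write succeeds only if the version has not changed since it was read. The in-memory `CandidateStore` below is a hypothetical stand-in for the shared state store; a real store would have to perform the compare-and-set atomically.

```python
class VersionConflict(Exception):
    pass


class CandidateStore:
    """Toy single-process store mapping candidate_id -> (version, state)."""

    def __init__(self):
        self._rows = {}

    def create(self, candidate_id, state):
        self._rows[candidate_id] = (1, state)

    def read(self, candidate_id):
        return self._rows[candidate_id]

    def compare_and_set(self, candidate_id, expected_version, new_state):
        """Write only if nobody else has updated the row since it was read."""
        version, _ = self._rows[candidate_id]
        if version != expected_version:
            raise VersionConflict(
                f"expected version {expected_version}, found {version}"
            )
        self._rows[candidate_id] = (version + 1, new_state)
        return version + 1


store = CandidateStore()
store.create("cand-1", "onsite_loop")

# Two workers read the same version, then both try to write.
seen_by_a, _ = store.read("cand-1")
seen_by_b, _ = store.read("cand-1")

store.compare_and_set("cand-1", seen_by_a, "decision")      # succeeds
try:
    store.compare_and_set("cand-1", seen_by_b, "rejected")  # stale version
except VersionConflict:
    print("worker B must re-read and retry")
```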

Isolating workloads and peak hiring periods

Hiring is often seasonal, with bursts during specific months or events. The system should isolate workloads by role, team, or region to prevent one hiring surge from affecting others. Queue-based processing allows the system to absorb spikes without overwhelming downstream services.

This approach also makes it easier to apply throttling and prioritization when resources are constrained.
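Prioritization and throttling on a queue can be sketched with a heap and a bounded batch size. The class below is an in-process illustration only; `SchedulingQueue` and the priority numbers are assumptions, and a deployed system would typically rely on a durable message queue.

```python
import heapq
import itertools


class SchedulingQueue:
    """Priority queue where a lower number means higher priority."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # preserves FIFO order within a priority

    def push(self, priority, request):
        heapq.heappush(self._heap, (priority, next(self._counter), request))

    def pop_batch(self, max_items):
        """Throttle consumers by handing out at most max_items requests."""
        batch = []
        while self._heap and len(batch) < max_items:
            _, _, request = heapq.heappop(self._heap)
            batch.append(request)
        return batch


queue = SchedulingQueue()
queue.push(2, "campus-1")
queue.push(1, "exec-1")
queue.push(2, "campus-2")

print(queue.pop_batch(2))  # ['exec-1', 'campus-1']
print(queue.pop_batch(5))  # ['campus-2']
```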

Supporting global hiring and regional distribution

For global organizations, the interview system must support candidates and interviewers across regions. This includes data locality considerations, region-aware scheduling, and localized compliance requirements. Designing regional isolation boundaries helps reduce latency and limits the impact of regional failures.

Reliability, fault tolerance, and failure handling

Interview systems must handle both technical failures and human-driven disruptions. Interviewers may miss sessions, candidates may disconnect, and services may experience outages. A resilient system anticipates these scenarios and provides recovery paths.

In interviews, this section is where you show that you design systems for imperfect conditions.

Handling interview session failures

If an interview session fails due to connectivity issues or platform errors, the system should detect the failure, record the partial state, and allow rescheduling without losing context. This includes preserving notes, session logs, and previously submitted feedback.

Explaining how the system avoids forcing candidates to restart entire interview loops demonstrates empathy and robustness.

Ensuring data consistency during partial failures

Failures often occur mid-workflow. The system must ensure that candidate state, scheduling data, and feedback remain consistent even if some operations fail. Durable state storage and transactional updates help prevent corrupted or ambiguous states.

Interviewers often probe how you avoid duplicate interviews or missing feedback under failure conditions.
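A common answer to the duplicate problem is an idempotency key: the client attaches a unique key to each logical request, and retries with the same key return the original result instead of creating a second record. `FeedbackService` and its fields are hypothetical; in practice the key lookup and the insert would share one transaction.

```python
class FeedbackService:
    """Toy service that deduplicates retried submissions by idempotency key."""

    def __init__(self):
        self._by_key = {}   # idempotency_key -> record_id
        self.stored = []    # list of (record_id, session_id, payload)

    def submit(self, idempotency_key, session_id, payload):
        # A retry after a timeout reuses the key and gets the same record back.
        if idempotency_key in self._by_key:
            return self._by_key[idempotency_key]
        record_id = len(self.stored) + 1
        self.stored.append((record_id, session_id, payload))
        self._by_key[idempotency_key] = record_id
        return record_id


service = FeedbackService()
first = service.submit("key-123", "s-1", {"recommendation": "hire"})
retry = service.submit("key-123", "s-1", {"recommendation": "hire"})
print(first == retry, len(service.stored))  # True 1
```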

Monitoring, alerting, and recovery workflows

Reliable systems require visibility. Monitoring interview success rates, scheduling delays, and feedback completion helps detect systemic issues early. Alerting mechanisms allow recruiters or operators to intervene before candidates are negatively impacted.

Discussing observability shows that you consider operability, not just architecture.

Fairness, bias mitigation, and auditability

Fairness in interviews cannot rely solely on human judgment. The system itself influences outcomes through scheduling, interviewer assignment, and feedback presentation. Recognizing this elevates your answer beyond purely technical concerns.

Interviewers often value candidates who explicitly call out ethical and compliance considerations.

Structured feedback and standardized evaluations

Structured evaluation forms reduce variability and bias in interviewer feedback. The system should enforce consistent criteria and prevent feedback visibility until all interviewers have submitted their evaluations. This helps maintain independent judgment.

Explaining how the system enforces structure without over-constraining interviewers demonstrates balance.
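The visibility rule can be sketched as a read path that checks loop completeness before returning anyone else's feedback. `FeedbackBoard` is a hypothetical name, and letting interviewers see their own entry before the loop completes is an assumption of this sketch rather than a requirement stated above.

```python
class FeedbackBoard:
    """Holds feedback for one loop and gates who can see what."""

    def __init__(self, required_interviewers):
        self._required = set(required_interviewers)
        self._submitted = {}

    def submit(self, interviewer_id, feedback):
        if interviewer_id not in self._required:
            raise PermissionError(f"{interviewer_id} is not on this loop")
        self._submitted[interviewer_id] = feedback

    def view(self, viewer_id):
        """Return all feedback once complete, otherwise only the viewer's own."""
        if set(self._submitted) == self._required:
            return dict(self._submitted)
        return {k: v for k, v in self._submitted.items() if k == viewer_id}


board = FeedbackBoard(["alice", "bob"])
board.submit("alice", "strong on design trade-offs")

print(board.view("bob"))    # {} because bob has not submitted yet
print(board.view("alice"))  # only alice's own entry

board.submit("bob", "solid debugging, weaker on scaling")
print(sorted(board.view("bob")))  # ['alice', 'bob']
```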

Anonymization and information control

In some stages, anonymizing candidate information can reduce unconscious bias. The system may hide certain attributes or delay their visibility until later stages. Designing controlled information access shows awareness of fairness-enhancing techniques.

This is particularly relevant for large organizations with formal hiring policies.
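Stage-based information control could be expressed as a per-stage list of hidden fields applied whenever a profile is read. The stage names and the choice of hidden attributes below are purely illustrative assumptions; which attributes to hide is a policy decision for each organization.

```python
# Fields hidden at each stage (hypothetical policy).
HIDDEN_FIELDS_BY_STAGE = {
    "resume_review": {"name", "photo_url", "school"},
    "onsite": set(),
}


def redact(profile, stage):
    """Return a copy of the profile without the fields hidden at this stage."""
    # Unknown stages fail closed: every field is hidden.
    hidden = HIDDEN_FIELDS_BY_STAGE.get(stage, set(profile))
    return {k: v for k, v in profile.items() if k not in hidden}


profile = {
    "name": "Jordan Lee",
    "school": "Example University",
    "years_experience": 6,
    "skills": ["python", "distributed systems"],
}

print(redact(profile, "resume_review"))
# {'years_experience': 6, 'skills': ['python', 'distributed systems']}
```

Applying redaction on the read path, rather than storing separate copies, keeps one source of truth for the candidate record.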

Audit logs and compliance requirements

Every action in the interview system should be auditable. This includes scheduling changes, interviewer assignments, feedback submissions, and final decisions. Audit logs support internal reviews, compliance audits, and dispute resolution.

Highlighting auditability reinforces that this system supports business-critical decisions.
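An audit trail is usually modeled as an append-only log. The sketch below goes one step further and chains entries by hash so that later edits are detectable; the hash chain is an added technique chosen for illustration, not something the design above requires. `AuditLog` and its field names are hypothetical, and timestamps are left out to keep the example deterministic.

```python
import hashlib
import json


class AuditLog:
    """Append-only log where each entry includes the hash of the previous one."""

    GENESIS = "0" * 64
    _FIELDS = ("actor", "action", "details", "prev_hash")

    def __init__(self):
        self._entries = []

    @staticmethod
    def _digest(body):
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, actor, action, details):
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        body = {"actor": actor, "action": action, "details": details, "prev_hash": prev_hash}
        self._entries.append({**body, "hash": self._digest(body)})

    def verify(self):
        """Recompute every hash and check that the chain is unbroken."""
        prev_hash = self.GENESIS
        for entry in self._entries:
            body = {k: entry[k] for k in self._FIELDS}
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("recruiter-7", "assign_interviewer", {"session": "s-1", "to": "bob"})
log.append("bob", "submit_feedback", {"session": "s-1"})
print(log.verify())  # True
```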

Trade-offs, constraints, and interview wrap-up

Automation versus human judgment

One of the key trade-offs in interview systems is how much to automate. Automation improves consistency and scale but can remove flexibility. Human judgment allows nuance but introduces variability.

In interviews, articulating this trade-off shows that you understand the limits of automation in human-centered systems.

Speed versus quality of hiring

Faster hiring reduces candidate drop-off but can compromise evaluation depth. Slower, more thorough processes improve signal quality but risk losing candidates. The system design should allow organizations to tune this balance based on hiring goals.

Discussing configurability demonstrates thoughtful design.

Customization versus standardization

Different teams and roles often require different interview processes. Excessive customization increases system complexity, while rigid standardization limits usefulness. A modular workflow system allows controlled variation without fragmentation.

This trade-off frequently resonates with interviewers who have seen both extremes in practice.

Managing time constraints in interviews

In a time-limited interview, it is important to prioritize architecture, workflows, and fairness considerations. Deep dives into UI or HR policy are less important than demonstrating system-level thinking.

Mentioning how you would adjust your explanation under time pressure shows interview awareness.

Using structured prep resources effectively

Use Grokking the System Design Interview on Educative to learn curated patterns and practice full System Design problems step by step. It’s one of the most effective resources for building repeatable System Design intuition.

Final thoughts

Designing a system to interview candidates tests more than technical knowledge. It evaluates your ability to reason about complex workflows, human behavior, and ethical constraints at scale. Interviewers look for clarity, structure, and an understanding of real-world hiring challenges.

If you approach the problem methodically, clarify assumptions, and explicitly address fairness, reliability, and trade-offs, you demonstrate readiness to design systems that support critical business decisions. That combination of technical depth and thoughtful judgment is what makes an answer stand out in System Design interviews.