System Design Examples: How to Approach and Solve Interview Questions Effectively

System Design examples are the primary way interviewers assess whether a candidate can think at the system level. Unlike theoretical questions, examples force candidates to make decisions, justify trade-offs, and adapt to incomplete information. This mirrors real engineering work far more closely than isolated concept questions.

Interviewers intentionally choose examples that appear familiar or simple on the surface. The goal is not to trick candidates, but to observe how they reason when the problem space is open-ended. Two candidates can work through the same example and receive very different evaluations based on how they structure and explain their thinking.

Why examples reveal more than concept questions

Conceptual knowledge can often be memorized. System Design examples cannot be solved effectively through memorization alone because interviewers actively change constraints, introduce failures, or probe alternative approaches. Candidates who rely on memorized architectures struggle when the conversation deviates from their prepared path.

Examples reveal how candidates respond to uncertainty. Interviewers observe whether candidates remain calm, whether they can reason aloud, and whether they can explain trade-offs clearly. These signals tend to say more about real-world performance than familiarity with specific technologies does.

The role of examples in senior-level evaluation

As the interview level increases, System Design examples become more important. For senior roles, examples are often the deciding factor. Interviewers expect candidates to demonstrate judgment, restraint, and clarity rather than encyclopedic knowledge.

Strong candidates use examples to show how they think, not how much they know. This is why practicing System Design examples is essential for interview success.

How interviewers evaluate System Design examples

One of the most common misconceptions is that interviewers are looking for a correct or optimal design. In reality, interviewers are evaluating the reasoning process. They want to understand how candidates arrive at decisions and how they respond when those decisions are questioned.

A design that is simple, well-justified, and adaptable is often rated higher than a complex design that lacks clear reasoning. Interviewers care deeply about whether candidates can explain why a choice was made and what trade-offs were accepted.

Signals interviewers listen for during examples

Interviewers listen closely to how candidates structure their explanations. They notice whether candidates start by clarifying requirements, whether they introduce a high-level design before diving into details, and whether they acknowledge uncertainty when appropriate.

They also pay attention to communication style. Clear, simple explanations signal true understanding, while dense terminology often signals shallow familiarity.

| Interview signal | What it indicates |
|---|---|
| Clear structure | Organized thinking |
| Trade-off discussion | Engineering judgment |
| Early failure awareness | Real-world experience |
| Calm adaptation | Senior-level maturity |

These signals often outweigh the specifics of the architecture itself.

Why follow-up questions matter more than the initial answer

Interviewers use follow-up questions to explore depth. They may change scale assumptions, introduce failure scenarios, or ask about alternatives. Strong candidates treat these questions as extensions of the same problem rather than disruptions.

How a candidate adapts under follow-up pressure is often more important than their initial design. Interviewers see follow-ups as opportunities to validate reasoning, not as attempts to invalidate answers.

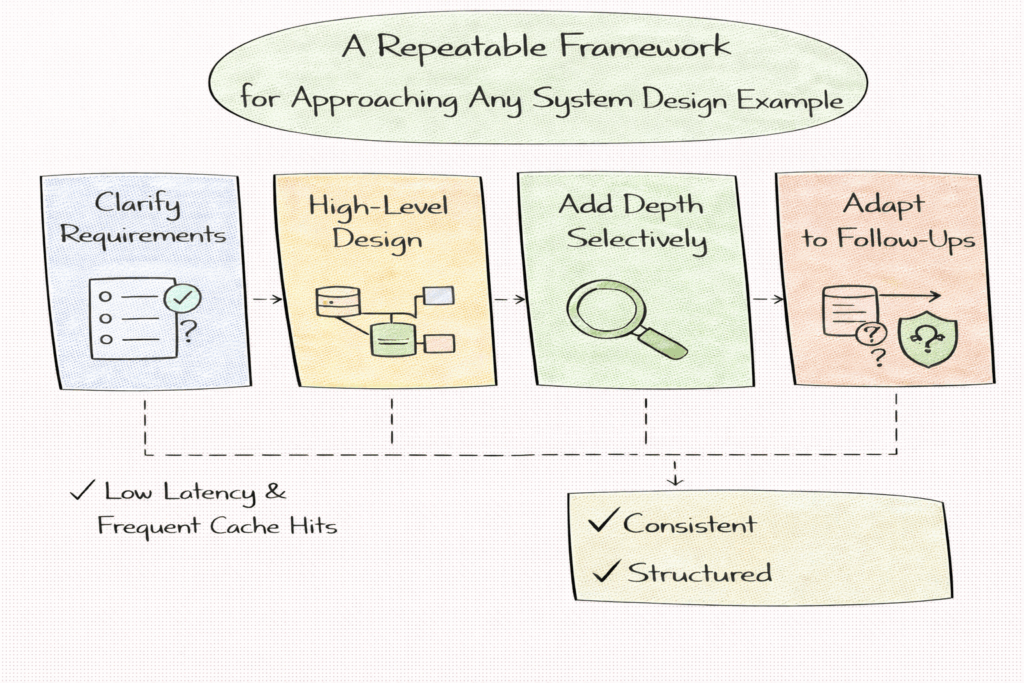

A repeatable framework for approaching any System Design example

System Design examples vary widely in domain, but the approach should remain consistent. A repeatable framework reduces cognitive load and prevents panic when the problem feels unfamiliar. Interviewers expect experienced candidates to follow a recognizable flow, even if they never explicitly name it.

Candidates who approach every example differently often appear scattered or reactive. Candidates who use a consistent structure appear calm and deliberate, even when solving a new problem.

Starting with clarification and framing

Every System Design example begins with understanding the problem. Before proposing any architecture, strong candidates clarify what the system must do and what constraints matter most. This includes scale expectations, correctness requirements, and operational assumptions.
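Clarifying scale often turns into a quick back-of-envelope estimate. The sketch below is one minimal way to make that arithmetic explicit; every input (daily active users, requests per user, peak-to-average ratio, write share, bytes per write) is a hypothetical placeholder you would confirm with the interviewer, not a benchmark.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass
class TrafficAssumptions:
    # Every field is a hypothetical input to confirm with the interviewer.
    daily_active_users: int
    requests_per_user_per_day: float
    peak_to_average_ratio: float
    write_fraction: float  # share of requests that store new data
    bytes_per_write: int


def estimate(a: TrafficAssumptions) -> dict:
    """Rough average/peak request rate and yearly storage growth."""
    daily_requests = a.daily_active_users * a.requests_per_user_per_day
    average_rps = daily_requests / SECONDS_PER_DAY
    daily_writes = daily_requests * a.write_fraction
    return {
        "average_rps": average_rps,
        "peak_rps": average_rps * a.peak_to_average_ratio,
        "storage_gb_per_year": daily_writes * a.bytes_per_write * 365 / 1e9,
    }


# Placeholder numbers for a small, read-heavy service.
result = estimate(TrafficAssumptions(
    daily_active_users=1_000_000,
    requests_per_user_per_day=20,
    peak_to_average_ratio=3.0,
    write_fraction=0.1,
    bytes_per_write=500,
))
for name, value in result.items():
    print(f"{name}: {value:,.1f}")
```

With these placeholder inputs the script prints roughly 231 requests per second on average, about 694 at peak, and 365 GB of new data per year. The exact figures matter less than the order of magnitude, which helps decide how much scaling discussion the problem actually needs.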

Framing the problem in your own words ensures alignment with the interviewer and demonstrates intentional thinking. It also gives you a reference point to return to later if the conversation becomes complex.

Progressing from high-level design to depth

After clarification, strong candidates move to a high-level design. This establishes a shared mental model of the system before diving into specific components. From there, depth is added selectively in areas that matter most for the given example.

This progression mirrors how real systems are designed and how interviewers expect candidates to reason. Jumping into details too early is a common mistake that leads to confusion and over-design.

Treating the example as a collaborative discussion

System Design examples are conversations, not monologues. Interviewers guide depth through questions and constraints. A structured approach allows candidates to adapt without losing coherence.

Strong candidates anchor follow-up discussions to earlier decisions, explaining how changes propagate through the design rather than starting over. This ability to reason incrementally is a key interview signal.

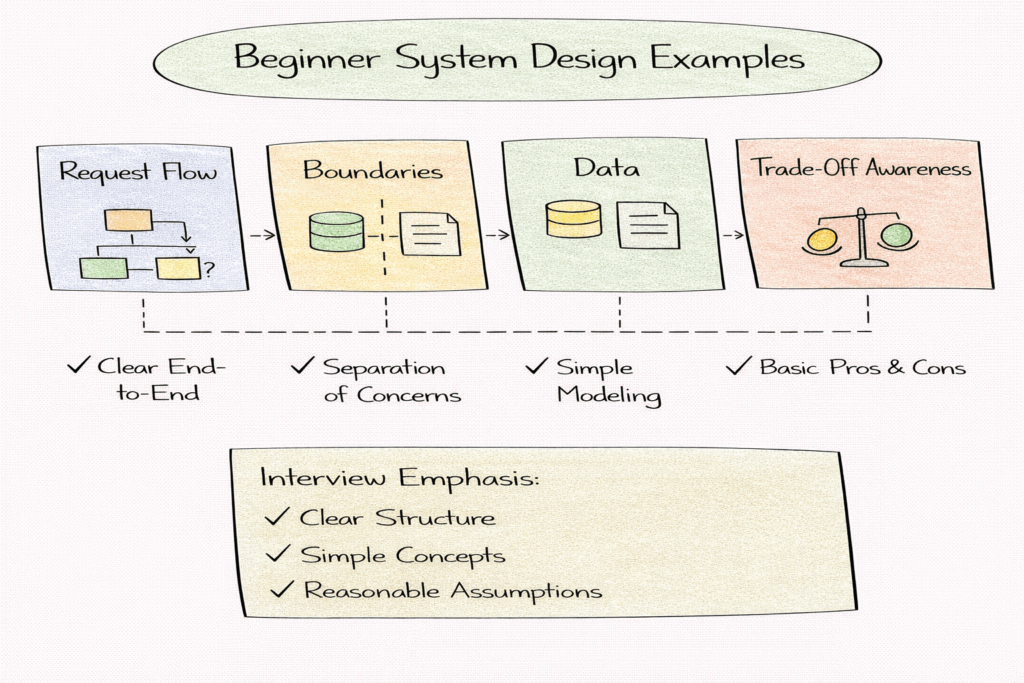

Beginner System Design examples and foundational thinking

Beginner System Design examples are used as an early filter. These examples test foundational thinking rather than scale or distributed complexity. Interviewers want to see whether candidates can explain request flow, define boundaries, and model simple data correctly.

Candidates who struggle with beginner examples often struggle later, regardless of how advanced their knowledge appears. This is why interviewers take these examples seriously.

What interviewers expect at the beginner level

At this stage, interviewers are primarily evaluating structure and communication. They expect candidates to describe how a request moves through the system, identify core components, and explain why those components exist.

Optimization is not the goal. Over-designing a beginner example often signals poor judgment rather than ambition.

| Focus area | What interviewers evaluate |
|---|---|
| Request flow | End-to-end clarity |
| Boundaries | Separation of concerns |
| Data | Simple, correct modeling |
| Trade-offs | Awareness, not depth |

Typical characteristics of beginner examples

Beginner examples usually involve a small number of operations and modest scale assumptions. The system may be read-heavy or write-heavy, but complexity is intentionally limited. Examples such as URL shorteners, basic file storage, or simple rate limiters fall into this category.
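Simple rate limiters are a good illustration of how small a beginner design can be. As a minimal sketch of one common approach, the token bucket below allows short bursts up to a capacity and refills at a fixed rate. It assumes a single process with in-memory state and takes the current time as an argument so the behavior is deterministic; a limiter shared across many servers would need the bucket state in a shared store, which this sketch does not cover.

```python
class TokenBucket:
    """In-memory token bucket: bursts up to `capacity`, refill at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, now: float = 0.0):
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity   # start full so an initial burst is allowed
        self.last = now          # time of the last refill calculation

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Refill for the time elapsed since the last call, capped at capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


limiter = TokenBucket(capacity=3, rate=1.0)
print([limiter.allow(now=0.0) for _ in range(4)])  # [True, True, True, False]
print(limiter.allow(now=1.0))                      # True: one token refilled after 1 s
```

The two parameters map directly onto the trade-off an interviewer might probe: capacity controls how bursty clients may be, and rate controls sustained throughput.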

While the problem domain may change, the expected reasoning remains consistent. Interviewers want to see clarity, not cleverness.

Why beginner examples set the tone for the interview

The way a candidate handles a beginner example often sets the tone for the entire interview. Clear structure, calm explanation, and reasonable assumptions build the interviewer’s confidence early. That confidence often leads to more collaborative and exploratory follow-up questions later.

Strong candidates treat beginner examples as opportunities to demonstrate fundamentals rather than rushing to advanced topics.

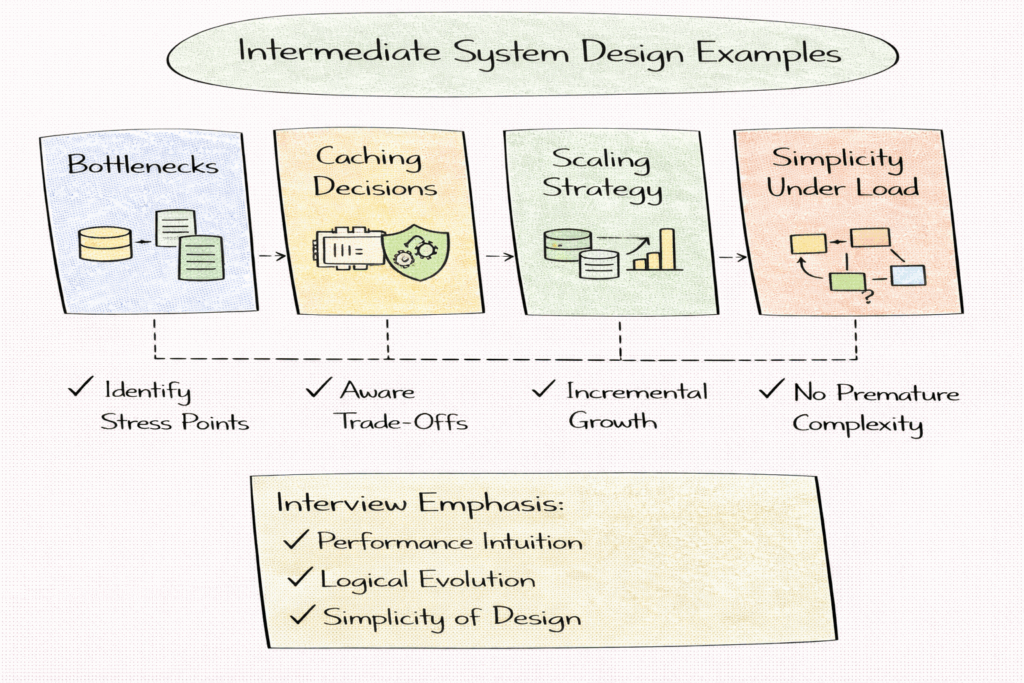

Intermediate System Design examples and scalability intuition

Intermediate System Design examples introduce scale as a meaningful constraint. At this stage, the system must continue to behave predictably as traffic grows, which forces candidates to think beyond basic correctness. Interviewers use these examples to test whether candidates can reason about performance, bottlenecks, and gradual system evolution.

The core shift at this level is moving from “does this work?” to “how does this behave under load?”

What interviewers expect in intermediate examples

Interviewers expect candidates to identify bottlenecks before proposing solutions. Rather than immediately adding caches, queues, or sharding, strong candidates explain which component becomes stressed first and why. This demonstrates performance intuition rather than solution memorization.
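A rough way to reason about which component is stressed first is to compare the load each component receives with its capacity, remembering that one user request may fan out into several calls. The sketch below assumes made-up capacities and fan-out counts; real figures would come from load tests or from the interviewer's stated assumptions.

```python
from typing import Dict, List, NamedTuple, Tuple


class Component(NamedTuple):
    name: str
    capacity_rps: float       # hypothetical sustainable throughput of this component
    calls_per_request: float  # how many calls one user request makes to it


def utilization(components: List[Component], request_rps: float) -> Dict[str, float]:
    """Fraction of each component's capacity used at a given user request rate."""
    return {c.name: request_rps * c.calls_per_request / c.capacity_rps for c in components}


def first_bottleneck(components: List[Component]) -> Tuple[str, float]:
    """The component that saturates first, and the user request rate at which it does."""
    limits = {c.name: c.capacity_rps / c.calls_per_request for c in components}
    name = min(limits, key=limits.get)
    return name, limits[name]


# Placeholder capacities, not benchmarks.
system = [
    Component("app_servers", capacity_rps=5_000, calls_per_request=1),
    Component("auth_service", capacity_rps=4_000, calls_per_request=1),
    Component("database", capacity_rps=2_000, calls_per_request=3),
]
print(first_bottleneck(system))               # database saturates near 667 user requests/s
print(utilization(system, 600)["database"])   # 0.9
```

Under these made-up numbers the database, not the app tier, is the first constraint, so adding app servers would not help; reducing queries per request or serving reads from a cache would.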

Candidates are also expected to explain how the system would scale incrementally. Interviewers want to hear how a simple design evolves over time, not how a large-scale architecture appears fully formed from the start.

| Design dimension | What interviewers evaluate |
|---|---|
| Bottleneck analysis | Performance intuition |
| Caching decisions | Trade-off awareness |
| Scaling strategy | Long-term thinking |
| Simplicity under load | Engineering judgment |

Typical intermediate System Design examples

Intermediate examples often involve systems such as news feeds, notification services, search autocomplete, or media storage platforms. These systems tend to be read-heavy or write-heavy and expose candidates to real-world performance trade-offs without deep distributed systems complexity.

The specific example matters less than the reasoning process. Interviewers look for candidates who can articulate why certain optimizations are necessary and what risks they introduce.
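Caching is the optimization most often reached for at this level, and it shows both sides of the trade-off. The minimal cache-aside sketch below assumes an in-process dictionary standing in for a cache service and a `load` function standing in for the database (both hypothetical). Reads get cheaper, but a value can be stale for up to the TTL unless writes invalidate it.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class CacheAside:
    """Cache-aside reads with a TTL. `load` stands in for a database query (hypothetical)."""

    def __init__(self, load: Callable[[str], Optional[str]], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.load = load
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries: Dict[str, Tuple[Optional[str], float]] = {}  # key -> (value, expiry)
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        now = self.clock()
        hit = self.entries.get(key)
        if hit is not None and hit[1] > now:
            return hit[0]              # fresh by TTL, but possibly stale vs. the database
        self.misses += 1
        value = self.load(key)         # miss or expired: read through to the source
        self.entries[key] = (value, now + self.ttl)  # also caches missing keys (None)
        return value

    def invalidate(self, key: str) -> None:
        # Called after a write so readers do not wait out the TTL.
        self.entries.pop(key, None)


db = {"user:1": "alice"}           # stand-in for the source of truth
fake_now = [0.0]                   # manual clock so the demo is deterministic
cache = CacheAside(db.get, ttl_seconds=10, clock=lambda: fake_now[0])
cache.get("user:1")                # miss: loads alice
db["user:1"] = "alicia"            # a write that bypasses the cache
print(cache.get("user:1"))         # prints alice: stale until the TTL expires
cache.invalidate("user:1")         # invalidating on write closes the gap
print(cache.get("user:1"))         # prints alicia
```

The TTL bounds how stale a read can be, while explicit invalidation narrows the window further at the cost of coupling every write path to the cache.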

Common pitfalls at the intermediate level

A common mistake at this stage is introducing complexity too early. Candidates sometimes over-design by assuming massive scale without justification. Interviewers prefer designs that start simple and evolve logically as constraints tighten.

Strong candidates show restraint and explain when complexity becomes necessary.

Advanced System Design examples and distributed systems depth

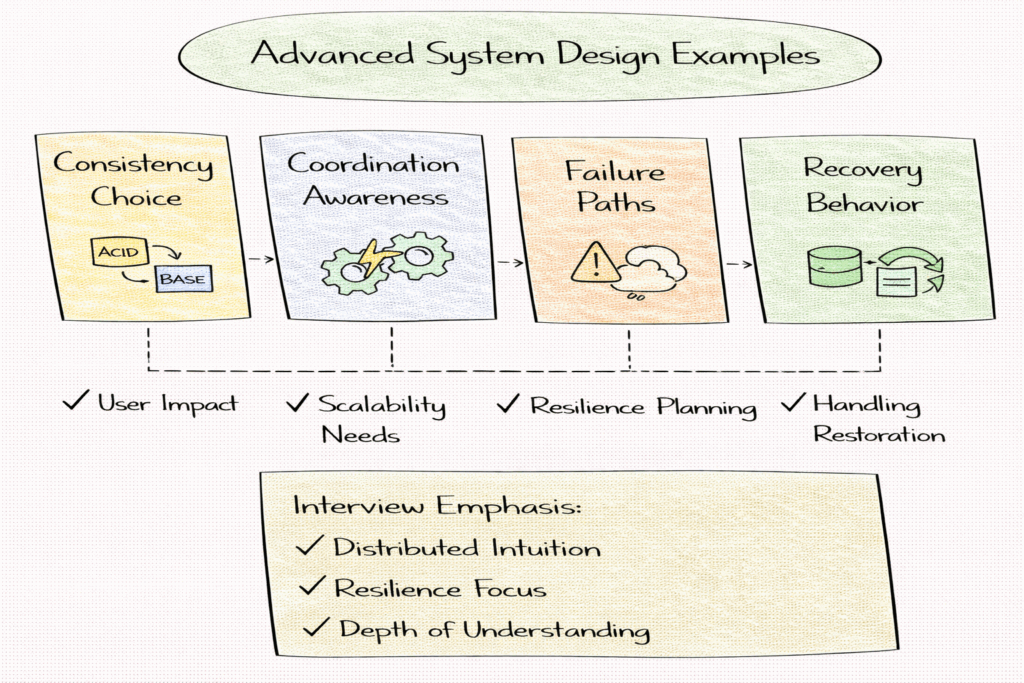

Advanced System Design examples test a candidate's understanding of distributed systems behavior. These problems involve multiple services, coordination challenges, consistency trade-offs, and failure scenarios that cannot be ignored. Interviewers use these examples to distinguish strong mid-level candidates from senior-level engineers.

At this level, ideal assumptions break down. Partial failures, network delays, and inconsistent states become central to the discussion.

What interviewers probe in advanced examples

Interviewers expect candidates to reason about coordination costs, consistency guarantees, and failure handling without relying on perfect conditions. They listen for whether candidates design systems that degrade gracefully rather than collapse when something goes wrong.

Answers that assume reliable networks, instantaneous communication, or global ordering are quickly challenged.
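One way to degrade gracefully instead of assuming a reliable network is sketched below, assuming a hypothetical dependency that can time out: retry a bounded number of times with capped exponential backoff and jitter, then fall back to a cheaper answer (here, a placeholder list of popular items) rather than failing the whole request. The `sleep` parameter exists only so the sketch can be tested without waiting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_fallback(
    op: Callable[[], T],
    fallback: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    max_attempts: int = 3,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry transient failures with capped exponential backoff and full jitter,
    then return a degraded fallback instead of failing the whole request.
    Errors not listed in `retry_on` propagate immediately."""
    for attempt in range(max_attempts):
        try:
            return op()
        except retry_on:
            if attempt == max_attempts - 1:
                break
            # Full jitter: sleep a random time in [0, min(max_delay, base_delay * 2**attempt)].
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    return fallback()


def fetch_recommendations():
    raise TimeoutError("recommendation service unavailable")  # simulated outage


print(call_with_fallback(fetch_recommendations, fallback=lambda: ["popular items"],
                         sleep=lambda s: None))  # ['popular items']
```

Jitter spreads retries from many clients over time so they do not hit a recovering service in synchronized waves, and the attempt cap keeps a failing dependency from consuming the caller's whole latency budget.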

| Distributed concern | What it reveals |
|---|---|
| Consistency choice | User-impact reasoning |
| Coordination awareness | Scalability maturity |
| Failure paths | Resilience thinking |
| Recovery behavior | Operational experience |

Typical advanced System Design examples

Advanced examples often include messaging systems, distributed schedulers, coordination services, or globally replicated data stores. These systems force candidates to confront trade-offs between availability, consistency, and performance directly.

Interviewers are not looking for formal proofs or protocol details. They want to see whether candidates understand how these systems behave in practice.
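One concrete way to make the availability and consistency trade-off explicit in a replicated store is quorum arithmetic, as used in leaderless (Dynamo-style) replication. The sketch assumes N replicas per key, writes acknowledged by W replicas, and reads that consult R replicas; read and write sets are guaranteed to overlap when R + W > N. The overlap rule is a simplification: it does not by itself guarantee linearizability, and sloppy quorums or concurrent writes need further handling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuorumConfig:
    n: int  # replicas holding each key
    w: int  # replicas that must acknowledge a write
    r: int  # replicas consulted on a read

    def __post_init__(self) -> None:
        if not (1 <= self.w <= self.n and 1 <= self.r <= self.n):
            raise ValueError("w and r must each be between 1 and n")

    @property
    def quorums_overlap(self) -> bool:
        # When r + w > n, every read set shares at least one replica with every
        # write set, so a read reaches a replica holding the latest acknowledged write.
        return self.r + self.w > self.n

    @property
    def write_failures_tolerated(self) -> int:
        return self.n - self.w  # writes still succeed with this many replicas down

    @property
    def read_failures_tolerated(self) -> int:
        return self.n - self.r


for cfg in (QuorumConfig(n=3, w=2, r=2), QuorumConfig(n=3, w=1, r=1)):
    print(cfg, "overlap:", cfg.quorums_overlap,
          "write/read failures tolerated:", cfg.write_failures_tolerated, cfg.read_failures_tolerated)
```

N=3, W=2, R=2 keeps reads and writes overlapping while tolerating one failed replica on each path; W=1, R=1 tolerates two failures and responds faster, but a read can miss the latest write.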

How strong candidates approach advanced examples

Strong candidates stay calm and explain their reasoning step by step. They acknowledge uncertainty where appropriate and make trade-offs explicit. Interviewers often value this clarity more than any specific architectural choice.

Advanced examples reward candidates who can explain consequences, not just mechanisms.

Data-heavy System Design examples

In data-heavy System Design examples, data modeling decisions dominate the architecture. These examples test whether candidates understand how data shapes scalability, consistency, and coupling. Interviewers often choose data-heavy examples to probe depth early in the discussion.

The hardest part of these examples is rarely infrastructure. It is deciding how data should be structured and owned.

What interviewers focus on in data-heavy examples

Interviewers pay close attention to how candidates identify core entities and relationships. They listen for clarity around ownership, update paths, and consistency guarantees. Systems that allow multiple services to modify the same data directly are viewed as fragile.

Candidates are also expected to reason about access patterns. Whether data is read frequently, written frequently, or both influences nearly every design decision.

| Data concern | Interview focus |
|---|---|
| Entity relationships | Modeling clarity |
| Ownership boundaries | Coupling control |
| Consistency needs | Correctness judgment |
| Access patterns | Performance intuition |

Typical data-heavy System Design examples

Examples such as e-commerce platforms, booking systems, financial ledgers, and analytics pipelines fall into this category. These systems often require careful handling of correctness and concurrency.
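For the concurrency side of a booking system, one common technique is optimistic concurrency control with version numbers. The sketch below assumes a hypothetical seat-booking service that is the single owner of seat records; an in-memory dictionary and a lock stand in for what would typically be a conditional update in the database (update only if the stored version still matches). Two users who read the same free seat cannot both book it.

```python
from threading import Lock
from typing import Dict, Optional


class ConflictError(Exception):
    """The caller's view is stale or the seat is taken; re-read before retrying."""


class Seat:
    def __init__(self, holder: Optional[str] = None, version: int = 0):
        self.holder = holder
        self.version = version


class SeatInventory:
    """Hypothetical single owner of seat state: other services call `book`
    instead of writing seat records directly."""

    def __init__(self, seats: Dict[str, Seat]):
        self._seats = seats
        self._lock = Lock()

    def read(self, seat_id: str) -> Seat:
        with self._lock:
            s = self._seats[seat_id]
            return Seat(s.holder, s.version)  # hand out a copy, not the owned record

    def book(self, seat_id: str, user: str, expected_version: int) -> int:
        # Compare-and-set: succeed only if the seat is unchanged since the caller read it.
        with self._lock:
            seat = self._seats[seat_id]
            if seat.version != expected_version:
                raise ConflictError(f"{seat_id} changed since read (now v{seat.version})")
            if seat.holder is not None:
                raise ConflictError(f"{seat_id} is already booked")
            seat.holder = user
            seat.version += 1
            return seat.version


inventory = SeatInventory({"12A": Seat()})
alice_view = inventory.read("12A")
bob_view = inventory.read("12A")          # both users see the seat as free
inventory.book("12A", "alice", alice_view.version)
try:
    inventory.book("12A", "bob", bob_view.version)
except ConflictError as err:
    print("bob:", err)                    # bob: 12A changed since read (now v1)
print(inventory.read("12A").holder)       # alice
```

Keeping the version check inside the single owning service is what makes the guarantee hold; if several services wrote seat records directly, each would need to enforce the same check.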

Interviewers expect candidates to explain why data is modeled a certain way and how it evolves over time, not just how it is stored.

Why data-heavy examples expose weak fundamentals

Candidates with shaky fundamentals often struggle here because data decisions force clear thinking. There is little room for hand-waving. Strong candidates lean on principles such as single ownership and clear boundaries to guide their designs.

Real-time and low-latency System Design examples

Real-time System Design examples introduce strict latency constraints. Systems such as chat platforms, live collaboration tools, or streaming services require predictable response times and responsive user experiences.

Interviewers use these examples to test whether candidates can reason about latency budgets and trade-offs under pressure.

What interviewers expect in low-latency designs

Interviewers expect candidates to explain where latency comes from and how it accumulates across the request path. They listen for an understanding of trade-offs between consistency, durability, and responsiveness.
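Latency accumulates differently depending on how stages are arranged: sequential stages add up, while concurrent stages cost only as much as the slowest one. The minimal sketch below uses hypothetical stage timings for sending one chat message and compares the critical path with a fully sequential version.

```python
from typing import Union


class Seq:
    """Stages that run one after another: their latencies add."""
    def __init__(self, *stages: "Stage"):
        self.stages = stages


class Par:
    """Stages that run concurrently: the slowest one sets the total."""
    def __init__(self, *stages: "Stage"):
        self.stages = stages


# A stage is a latency in milliseconds or a nested group of stages.
Stage = Union[float, Seq, Par]


def latency_ms(stage: Stage) -> float:
    if isinstance(stage, Seq):
        return sum(latency_ms(s) for s in stage.stages)
    if isinstance(stage, Par):
        return max((latency_ms(s) for s in stage.stages), default=0.0)
    return float(stage)


# Hypothetical timings (ms) for sending one chat message.
send_path = Seq(
    20,            # client to edge
    5,             # auth check
    Par(15, 25),   # persist message and push to online recipients concurrently
    20,            # acknowledgement back to client
)
print(latency_ms(send_path))                # 70.0
print(latency_ms(Seq(20, 5, 15, 25, 20)))   # 85.0 if persist and push ran sequentially
```

Against a hypothetical 100 ms budget both versions fit, but the concurrent one leaves 30 ms of headroom instead of 15. When a stage threatens the budget, this is where relaxing a guarantee, such as acknowledging before a slower durable write completes, becomes a deliberate trade-off.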

Strong candidates explain why certain guarantees may be relaxed to meet latency goals and what users experience as a result.

| Latency concern | What it reveals |
|---|---|
| Critical path reasoning | Performance intuition |
| Trade-off clarity | Practical judgment |
| Backpressure handling | System stability awareness |
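Backpressure means a component signals that it cannot accept more work instead of buffering without limit, which would otherwise let latency and memory grow until the system falls over. A minimal single-process sketch using Python's standard `queue.Queue` with a fixed `maxsize`: when the buffer is full, `offer` returns False so the caller can slow down, retry later, or drop low-priority work. The class and method names are illustrative, not from any particular framework.

```python
import queue
from typing import List


class BoundedIngest:
    """Accepts work only while the buffer has room; otherwise tells the caller to back off."""

    def __init__(self, max_pending: int):
        self._q: "queue.Queue[str]" = queue.Queue(maxsize=max_pending)
        self.rejected = 0

    def offer(self, item: str) -> bool:
        try:
            self._q.put_nowait(item)
            return True
        except queue.Full:
            # Shed load instead of letting the backlog and its latency grow without bound.
            self.rejected += 1
            return False

    def drain(self, max_items: int) -> List[str]:
        # Consumer side: take up to max_items without blocking.
        items: List[str] = []
        while len(items) < max_items:
            try:
                items.append(self._q.get_nowait())
            except queue.Empty:
                break
        return items


ingest = BoundedIngest(max_pending=2)
print([ingest.offer(m) for m in ("m1", "m2", "m3")])  # [True, True, False]
print(ingest.drain(10))                               # ['m1', 'm2']
print(ingest.offer("m4"))                             # True: room again after draining
```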

Typical real-time System Design examples

Examples in this category include chat applications, collaborative editors, live feeds, and streaming systems. These systems often involve concurrency, ordering, and delivery guarantees that must be balanced carefully.

Interviewers do not expect candidates to solve every edge case. They expect thoughtful reasoning about constraints and user experience.

Common mistakes in real-time examples

A frequent mistake is treating real-time systems like batch systems. Candidates may over-prioritize consistency or durability at the expense of responsiveness. Interviewers prefer candidates who recognize that latency-sensitive systems require different trade-offs.

Platform-focused System Design examples

Platform-focused System Design examples shift attention away from end-user features and toward infrastructure concerns. These examples test whether candidates understand how large systems support other systems. Interviewers use them to assess architectural maturity and operational thinking.

In these examples, the “user” is often another service or team. Success depends on stability, predictability, and isolation rather than visible product functionality.

What interviewers look for in platform designs

Interviewers expect candidates to think in terms of separation of concerns. Concepts such as control plane versus data plane, configuration management, and isolation boundaries become central. Candidates who blur these responsibilities often produce fragile designs.

Interviewers also listen for how candidates reason about blast radius, meaning the set of services and users affected when a component fails or a bad change ships. Platform systems must fail safely because they support many dependent services.
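Configuration safety and blast radius often come together in a staged (canary-style) rollout. In the minimal sketch below, the `apply`, `rollback`, and `healthy` hooks are hypothetical callables supplied by the caller. The change goes to 1%, then 10%, 50%, and 100% of hosts, and any failed health check rolls back every updated host and stops the rollout.

```python
from typing import Callable, List, Sequence


class RolloutHalted(Exception):
    pass


def staged_rollout(
    hosts: Sequence[str],
    apply: Callable[[str], None],            # hypothetical: push new config to one host
    rollback: Callable[[str], None],         # hypothetical: restore last-known-good config
    healthy: Callable[[List[str]], bool],    # hypothetical health check on updated hosts
    stage_percents: Sequence[int] = (1, 10, 50, 100),
) -> List[str]:
    """Apply a change to growing slices of the fleet, checking health between
    stages so a bad change is caught while its blast radius is still small."""
    updated: List[str] = []
    for pct in stage_percents:
        target = max(1, (len(hosts) * pct + 99) // 100)  # integer ceiling of pct% of hosts
        for host in hosts[len(updated):target]:
            apply(host)
            updated.append(host)
        if not healthy(updated):
            for host in reversed(updated):
                rollback(host)
            raise RolloutHalted(f"unhealthy after {len(updated)} of {len(hosts)} hosts; rolled back")
    return updated


fleet = [f"host-{i}" for i in range(20)]
pushed: List[str] = []
try:
    # Simulate a bad config whose damage only shows once more than 2 hosts run it.
    staged_rollout(fleet, apply=pushed.append, rollback=lambda h: None,
                   healthy=lambda updated: len(updated) <= 2)
except RolloutHalted as err:
    print(err)  # unhealthy after 10 of 20 hosts; rolled back
```

In this simulated run the bad change reaches half the fleet before it is caught and reverted, rather than all of it; tighter early stages or better health signals shrink that further.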

| Platform concern | What interviewers evaluate |
|---|---|
| Control vs data plane | Operational maturity |
| Isolation boundaries | Risk containment |
| Configuration safety | Change management awareness |
| Dependency management | Reliability thinking |

Typical platform-focused System Design examples

Examples such as CDNs, deployment systems, API gateways, coordination services, and observability platforms fall into this category. These systems often emphasize availability and correctness over raw feature richness.

Interviewers expect candidates to explain how these platforms evolve safely and how they protect downstream systems from failure.

Why platform examples expose senior-level thinking

Candidates who perform well in platform-focused examples demonstrate an understanding that System Design is not only about serving traffic but also about enabling safe operation at scale. This perspective strongly differentiates senior candidates from mid-level ones.

How to adapt System Design examples when interviewers change constraints

Interviewers often change requirements mid-discussion to test adaptability. This is not a trick. It reflects real-world engineering, where constraints change frequently and designs must evolve.

Strong candidates treat constraint changes as natural extensions of the same problem rather than disruptions.

Anchoring changes to earlier decisions

When constraints change, strong candidates do not restart their design. Instead, they refer back to earlier assumptions and explain how the new constraint affects specific components or decisions.

This approach shows continuity of reasoning and reinforces confidence in the original structure.

Explaining trade-offs during adaptation

Adapting a design requires acknowledging trade-offs. Candidates should explain what improves and what worsens as a result of the change. Interviewers value candidates who communicate these consequences clearly.

A calm explanation of trade-offs signals maturity and experience.

Why adaptability often outweighs initial design quality

Interviewers frequently rate candidates higher for graceful adaptation than for a strong initial design. The ability to adjust under pressure reflects how candidates will behave in real engineering discussions.

Candidates who resist changes or become defensive often struggle at this stage.

Common mistakes candidates make with System Design examples

Jumping into architecture too quickly

A frequent mistake is starting with components before clarifying requirements. This leads to misaligned designs and wasted time. Interviewers interpret this as poor problem framing rather than enthusiasm.

Strong candidates slow down initially to ensure clarity.

Over-designing from the start

Many candidates assume massive scale or complex requirements without justification. This results in unnecessary complexity and signals weak judgment. Interviewers prefer designs that start simple and grow logically.

Over-designing often creates more problems than it solves.

Ignoring failure modes

Candidates sometimes focus entirely on the happy path. Interviewers quickly expose this weakness by introducing failures. Designs that collapse under failure lose credibility.

Strong candidates proactively discuss failure behavior.

| Common mistake | Interview interpretation |
|---|---|
| Skipping clarification | Disorganized thinking |
| Over-engineering | Poor judgment |
| Ignoring failures | Lack of experience |
| Component listing | Shallow understanding |

Treating examples as exams

Candidates who treat System Design examples as tests often become rigid. Interviewers prefer candidates who think aloud, explore alternatives, and adapt collaboratively.

System Design interviews reward conversation, not memorization.

Practicing System Design examples effectively

System Design practice is about reasoning and communication, not correctness or speed. Practicing silently or focusing only on diagrams is insufficient.

Candidates must practice explaining their designs clearly and confidently.

Repetition and refinement over novelty

Solving many different examples once is less effective than solving a shorter set multiple times. Each iteration should improve clarity, structure, and trade-off explanation.

Interviewers notice this refinement during real interviews.

Practicing under realistic conditions

Effective practice includes time limits, interruptions, and constraint changes. This prepares candidates for real interview dynamics and builds composure under pressure.

Practicing with peers or mock interviewers accelerates learning by exposing blind spots.

Using examples as learning tools

The goal of practice is not to memorize solutions. It is to build intuition and confidence. Strong candidates use examples to internalize patterns rather than replicate architectures.

Using structured prep resources effectively

Use Grokking the System Design Interview on Educative to learn curated patterns and practice full System Design problems step by step. It’s one of the most effective resources for building repeatable System Design intuition.


Final thoughts

System Design examples are not templates to memorize or puzzles to solve. They are thinking tools that help candidates develop clarity, judgment, and adaptability.

Interviewers evaluate how candidates reason under uncertainty, not how many systems they recognize. Candidates who internalize a structured approach, explain trade-offs calmly, and adapt gracefully consistently perform well.

Mastering System Design examples is ultimately about mastering a way of thinking. Once that mindset is in place, unfamiliar problems feel manageable, interviews feel conversational, and System Design becomes a strength rather than a source of anxiety.